
6 strategic ways to level up your CI/CD pipeline

From incorporating accessibility testing to implementing blue-green deployment models, here are six practical and strategic ways to improve your CI/CD pipeline.

In today’s world, a well-tuned CI/CD pipeline is a critical component for any development team looking to build and ship high-quality software fast. But here’s the thing: It’s rare you’ll find two CI/CD pipelines that are exactly the same. And that’s by design. Every CI/CD pipeline should be built to meet a team’s specific needs.

Despite this, there are levels of maturity when building a CI/CD pipeline that range from basic implementations to more advanced automation workflows. But wherever you are on your CI/CD journey, there are a few things you can do to level up your CI/CD pipeline.

With that, here are six strategic things I often see missing from CI/CD pipelines that can help any developer or team advance and improve their workflows.

1. Add performance, device compatibility, and accessibility testing

Performance, device compatibility, and accessibility testing are often a manual exercise—and something that some teams are only partially doing. Manually testing for these things can slow down your delivery cycle, so many teams either eat the costs or just don’t do it.

But if these things are important to you—and they should be—there are tools that can be included in your CI/CD pipeline to automate the testing for and discovery of any issues.

Performance and device compatibility testing

One tool, for example, is Playwright, which can run automated end-to-end tests across Chromium, Firefox, and WebKit, including emulated mobile devices. You can also use it for UI testing so you catch issues in your product before your users do.

Visual regression testing

There’s another class of tools that can help you automate visual regression testing, which makes sure you haven’t introduced any unexpected UI changes. These tools typically compare a new screenshot against an approved baseline and flag the differences. This can be super useful for device compatibility testing too: if something looks bad on one device, you can catch it and correct it quickly.
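To make the idea concrete, here is a minimal sketch of the comparison at the heart of a visual regression check. It assumes screenshots have already been decoded into rows of (R, G, B) tuples, and the function names and the 1% threshold are hypothetical choices for illustration, not the behavior of any particular tool.

```python
def changed_pixel_ratio(baseline, candidate):
    """Return the fraction of pixels that differ between two same-sized images.

    Each image is a list of rows, and each pixel is an (R, G, B) tuple.
    """
    same_shape = len(baseline) == len(candidate) and all(
        len(row_a) == len(row_b) for row_a, row_b in zip(baseline, candidate)
    )
    if not same_shape:
        raise ValueError("screenshots must have the same dimensions")

    total = sum(len(row) for row in baseline)
    if total == 0:
        return 0.0

    changed = sum(
        1
        for row_a, row_b in zip(baseline, candidate)
        for px_a, px_b in zip(row_a, row_b)
        if px_a != px_b
    )
    return changed / total


def has_visual_regression(baseline, candidate, threshold=0.01):
    # Hypothetical tolerance: flag the build when more than 1% of pixels changed.
    return changed_pixel_ratio(baseline, candidate) > threshold
```

In a pipeline, you would likely run a check like this once per device or viewport size and fail the job when any of them crosses the threshold.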

Accessibility testing

This is another incredibly impactful class of automated tests to add to your CI/CD pipeline. Why? Because every one of your customers should be valuable to you—and if even just a fraction of your customers have trouble using your product, that matters.

There are a ton of accessibility testing tools that can tell you things like whether you have appropriate content for screen readers or whether the colors on your website work for someone with color blindness. A great example is Pa11y, an open source tool you can use to run automated accessibility tests via the command line or Node.js.
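As one example of the kind of rule these tools automate, here is a small sketch of a color contrast check based on the WCAG 2.x contrast ratio formula. This is my own illustration, not Pa11y's implementation, and the function names are made up for this post.

```python
def _linearize(channel):
    """Convert an 8-bit sRGB channel to its linear value, per WCAG 2.x."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    r, g, b = (_linearize(value) for value in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background):
    """Return the WCAG contrast ratio, from 1 (no contrast) to 21 (black on white)."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def passes_wcag_aa(foreground, background, large_text=False):
    # WCAG AA asks for at least 4.5:1 for normal text and 3:1 for large text.
    minimum = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= minimum
```

A CI job could run a check like this against the text and background colors in your design tokens and fail the build when a pair falls below the threshold.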

2. Incorporate more automated security testing

Security should always be part of your software delivery pipeline, and it’s incredibly vital in today’s environments. Even still, I’ve seen a number of teams and companies who aren’t incorporating automated security tests in their CI/CD pipelines and instead treat security as something that happens after the DevOps process takes place.

Here’s the good news: There are a lot of tools that can help you do this without too much effort, including GitHub-native tools like Dependabot, code scanning, and secret scanning. If you’re a GitHub Enterprise user, you can bundle all the security functionality GitHub offers and more with GitHub Advanced Security. But even with a free GitHub account, you can still use Dependabot on any public or private repository, and code scanning and secret scanning are available on all public repositories, too.

Dependabot, for example, can help you mitigate potential issues in your dependencies by scanning them for outdated packages and automatically creating pull requests so teams can update them. It can also be configured to update project dependencies automatically.
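The core idea behind that kind of check is simple enough to sketch. The snippet below is a toy illustration, not how Dependabot works internally: it assumes plain dotted numeric versions, and the package names and version data are hypothetical.

```python
def parse_version(version):
    """Turn '2.31.0' into (2, 31, 0) so versions compare numerically, not as text."""
    return tuple(int(part) for part in version.split("."))


def outdated_dependencies(pinned, latest):
    """Return one suggested update per dependency that is behind its latest release."""
    updates = []
    for name in sorted(pinned):
        if name in latest and parse_version(latest[name]) > parse_version(pinned[name]):
            updates.append(f"Bump {name} from {pinned[name]} to {latest[name]}")
    return updates


# Hypothetical data: what the project pins today vs. what a registry reports.
pinned = {"libfoo": "2.9.0", "libbar": "3.0.0"}
latest = {"libfoo": "2.31.0", "libbar": "3.0.0"}
suggestions = outdated_dependencies(pinned, latest)
```

Comparing versions as tuples of integers matters here: as plain strings, "2.9.0" would sort after "2.31.0" and the update would be missed.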

This is super impactful. Developers and teams often don’t update their dependencies because of the time it takes, or sometimes they just forget. Yet dependencies are a legitimate source of vulnerabilities that is all too often overlooked.

Additionally, code scanning and secret scanning are offered on the GitHub platform and can be built into your CI/CD pipeline to improve your security profile. Code scanning offers SAST capabilities that show whether your code itself contains any known vulnerabilities, while secret scanning makes sure you’re not leaking any credentials to your repositories. Secret scanning can also be used to block pushes to your repository that contain exposed credentials.
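If you are curious what a secret check looks like in principle, here is a deliberately tiny sketch. It is not GitHub's implementation: the two patterns are illustrative only, real scanners cover far more formats, and the function names are hypothetical.

```python
import re

# Illustrative patterns only. Real scanners ship many more and keep them updated.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
}


def find_secrets(text):
    """Return (line_number, pattern_name) for every line that looks like a secret."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, name))
    return findings


def should_block_push(text):
    # Mirrors the idea of rejecting a push when any credential is detected.
    return bool(find_secrets(text))
```

The sample key used to exercise a check like this should always be a documented placeholder, such as the example access key ID AWS uses in its own docs, never a real credential.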

The biggest thing is that teams should treat security as something you do throughout the SDLC, not just before and after something goes to production. You should, of course, always be checking for security issues. But the earlier you can catch issues, the better (hello DevSecOps). So including security testing within your CI/CD pipeline is an essential practice.

3. Build a phased testing strategy

Phased testing is a great strategy for making sure you’re able to deliver secure software fast and at scale. But it’s also something that takes time to build. And consequently, a lot of teams just aren’t doing it.

Often, developers will put all or most of their automated testing at the build phase in their CI/CD pipelines. That means the build can take a long time to execute. And while there’s nothing necessarily wrong with this, you may find that it takes longer to get feedback on your code.

With phased testing, you can catch the big things early and get faster feedback on your codebase. The goal is to have a quick build that rapidly tests the fundamentals with simpler tests such as unit tests. After this, you might deploy your build to a test environment to execute additional tests such as some accessibility testing, user testing, and other things that may take longer to execute. This means you’re working your way through a number of possible issues, starting with the most critical elements first.
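Here is a rough sketch of that fail-fast ordering. The phase names and the stand-in checks are hypothetical. In a real pipeline each check would kick off a test suite, and each phase would usually map to its own job or stage.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Phase:
    name: str
    checks: List[Callable[[], bool]]


def run_pipeline(phases: List[Phase]) -> Tuple[bool, List[str]]:
    """Run phases in order and stop at the first phase with a failing check.

    Returns (passed, names of the phases that actually ran).
    """
    ran = []
    for phase in phases:
        ran.append(phase.name)
        # all() short-circuits, so later checks in a failing phase are skipped too.
        if not all(check() for check in phase.checks):
            return False, ran
    return True, ran


# Hypothetical phases, ordered from fastest feedback to slowest.
phases = [
    Phase("build", [lambda: True]),           # quick unit tests
    Phase("test-environment", [lambda: False]),  # e.g. a failing accessibility test
    Phase("pre-production", [lambda: True]),  # slower regression tests
]
passed, ran = run_pipeline(phases)
```

Because the second phase fails, the slow pre-production phase never runs, which is exactly the point: you pay for the expensive tests only once the cheap ones pass.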

As you get closer to production in a phased testing model, you’ll want to test more and more things. This will likely include key items such as regression testing to make sure previous bugs aren’t reappearing in your codebase. At this stage, things are less likely to go wrong. But you’ll want to effectively catch the big things early and then narrow your testing down to ensure you’re shipping a very high-quality application.

Oh, and of course, there’s also testing in production, which is its own thing. But you can incorporate post-deployment tests into your production environment. You may have a hypothesis about whether something works in production and execute tests to find out. At GitHub, we do this a lot by releasing new features behind feature flags and then enabling that flag for a subset of our user base to collect feedback.
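One common way to enable a flag for a subset of users is a percentage rollout. The sketch below is a generic illustration and makes no claim about how GitHub's own feature flags work. The flag name, user IDs, and function name are all hypothetical.

```python
import hashlib


def is_enabled(flag_name, user_id, rollout_percent):
    """Deterministically decide whether a user falls inside a percentage rollout.

    Hashing the flag name together with the user ID gives each user a stable
    bucket from 0 to 99, so the same user always gets the same answer.
    """
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100
    return bucket < rollout_percent


# Hypothetical usage: show the new feature to roughly 10% of users.
show_new_dashboard = is_enabled("new-dashboard", "user-1234", 10)
```

Because a user's bucket never changes, raising the percentage only ever adds users to the rollout. Nobody who already has the feature loses it when you go from 10% to 50%.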

4. Invest in blue-green deployments for easier rollouts

When it comes to releasing a new version of an application, what’s one word you think of? For me, the big word is “stress” (although “excitement” and “relief” are a close second and third). Blue-green deployments are one way to improve how you roll out a new version of an application in your CI/CD pipeline, but they can also be a bit more complex.

In the simplest terms, a blue-green deployment involves having two or more versions of your application in production and slowly moving your users from an older version to a newer one. This means that when you need to update or deploy a new version of an application, it goes to an “unused” production environment, and you can slowly move your users across safely.

The benefit of this is you can quickly roll back any changes by redirecting users to another prod environment. It also leads to drastically reduced downtime while you’re deploying a new application version. You can get everything set up in the environment and then just point people to a new one.
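To show why rollback is so cheap in this model, here is a minimal in-memory sketch. The class and method names are hypothetical, and for simplicity it cuts all traffic over at once instead of moving users gradually. In practice the "switch" would be a load balancer or DNS change.

```python
class BlueGreenRouter:
    """Tracks two production environments and which one receives traffic."""

    def __init__(self, live_version):
        self.versions = {"blue": live_version, "green": None}
        self.live = "blue"

    @property
    def idle(self):
        return "green" if self.live == "blue" else "blue"

    def deploy_to_idle(self, version):
        # New releases always go to the environment that is not serving users.
        self.versions[self.idle] = version
        return self.idle

    def switch(self):
        if self.versions[self.idle] is None:
            raise RuntimeError("nothing deployed to the idle environment")
        self.live = self.idle

    def rollback(self):
        # The previous version is still running in the now-idle environment,
        # so rolling back is the same operation as switching.
        self.switch()

    def route(self):
        return self.versions[self.live]


router = BlueGreenRouter("v1")
router.deploy_to_idle("v2")  # users still see v1
router.switch()              # users now see v2
router.rollback()            # users see v1 again, no redeploy needed
```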

Blue-green deployments are perfect when you have two environments that are interchangeable. In reality with larger systems, you may have a suite of web servers or a number of serverless applications running. In practice, this means you might be using a load balancer that can distribute traffic across multiple locations. The canonical example of a load balancer is nginx—but every cloud has its own offerings (like Azure Front Door or Elastic Load Balancing on AWS).

This kind of strategy is common among organizations using Kubernetes. You may have a number of pods that are running, and when you do a deployment, Kubernetes will deploy updates to new instances and redirect traffic to them. The management of which ones are up and running operates under the same principles as blue-green deployments, but you’re also navigating a far more complex architecture.

5. Adopt infrastructure-as-code for greater flexibility

Infrastructure provisioning is the practice of building IT infrastructure as you need it—and some teams will adopt infrastructure-as-code (IaC) in their CI/CD pipelines to provision resources automatically at specific points in the pipeline.

I strongly recommend doing this. The goal of IaC is that when you’re deploying your application, you’re also deploying your infrastructure. That means you always know what your infrastructure looks like in production, and your testing environment can be a faithful replica of what’s in production.

There are two benefits to building IaC into your CI/CD pipeline:

- It helps you make sure that your application and the infrastructure it runs on are routinely being tested in tandem. The old school way of doing things was to say that this is a production machine and it looks like this—and this is our testing machine and we want it to be as close to production as possible. But almost always, you’ll find that production environments change over time—and it makes it harder to know what your production environment is.

- It helps you mitigate any real-time issues with your infrastructure. That means if your production server goes down, it’s not a disaster—you can just re-deploy it (and even automate your redeployment at that).

Last but not least: building IaC into your CI/CD pipeline means you can more effectively do things like blue-green deployments. You can deploy a new version of an application—code and infrastructure included—and reroute your DNS to go to that version. If it doesn’t work, that’s fine—you can quickly roll back to your previous version.
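Under the hood, most IaC tools boil down to comparing the infrastructure you declared with what actually exists and then closing the gap. Here is a toy sketch of that idea. The resource names and settings are hypothetical, and a real tool would call a cloud provider's API instead of returning a dictionary.

```python
def plan(desired, actual):
    """Compare declared infrastructure with what is running and list the changes."""
    to_create = {name: spec for name, spec in desired.items() if name not in actual}
    to_update = {
        name: spec
        for name, spec in desired.items()
        if name in actual and actual[name] != spec
    }
    to_delete = sorted(name for name in actual if name not in desired)
    return {"create": to_create, "update": to_update, "delete": to_delete}


def apply(desired, actual):
    """Return the state after applying the plan (a stand-in for real API calls)."""
    changes = plan(desired, actual)
    new_state = {
        name: spec for name, spec in actual.items() if name not in changes["delete"]
    }
    new_state.update(changes["create"])
    new_state.update(changes["update"])
    return new_state


# Hypothetical example: production has drifted from what the repository declares.
desired = {"web": {"instances": 3}, "db": {"size": "large"}}
actual = {"web": {"instances": 2}, "legacy-cron": {"size": "small"}}
changes = plan(desired, actual)
```

Running the same plan against a freshly applied environment produces no changes, which is what makes test and production environments reproducible from the same definition.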

6. Create checkpoints for automated rollbacks

Ideally, you want to avoid ever having to roll back a software release. But let’s be honest. We all make mistakes and sometimes code that worked in your development or test environment doesn’t work perfectly in production.

When you need to roll back a release to a previous application version, automation makes it much easier to do so quickly. I think of a rollback as a general term for mitigating production problems by reverting to a previous version, whether that’s redeploying or restoring from backup. If you have a great CI/CD pipeline, you can ideally fix a problem and roll out an update immediately—so you can avoid having to go to a previous app version.
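As a sketch of what a checkpoint can look like, the snippet below records each version that passes a health check and falls back to the last good one when a new release fails. The class, the version names, and the health check are all hypothetical. A real pipeline would redeploy the previous artifact or restore from backup at the rollback step.

```python
class ReleaseManager:
    """Deploys versions and rolls back automatically when a health check fails."""

    def __init__(self):
        self.checkpoints = []  # versions that passed their health check, oldest first
        self.current = None

    def deploy(self, version, health_check):
        previous = self.current
        self.current = version
        if health_check(version):
            # Healthy release: record it as a checkpoint we can return to later.
            self.checkpoints.append(version)
            return True
        # Unhealthy release: revert to the last known good version.
        self.current = previous
        return False


def healthy_unless_v2(version):
    # Stand-in for real post-deployment checks (smoke tests, error rates, etc.).
    return version != "v2"


releases = ReleaseManager()
releases.deploy("v1", healthy_unless_v2)  # passes, becomes a checkpoint
releases.deploy("v2", healthy_unless_v2)  # fails, v1 stays live
```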

Looking for more ways to improve your CI/CD pipeline?

Try exploring the GitHub Marketplace for CI/CD and automation workflow templates. At the time I’m writing this, there are more than 14,000 pre-built, community-developed CI/CD and automation actions in the GitHub Marketplace. And, of course, you can always build your own custom workflows with GitHub Actions.

Additional resources

- [Blog] How to build a CI/CD pipeline on GitHub in four steps

- [Blog] 5 DevOps tips to speed up your developer workflow

- [Blog] A beginner’s guide to CI/CD and automation on GitHub

Tags:

Written by

Related posts

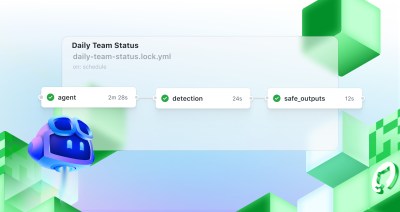

Automate repository tasks with GitHub Agentic Workflows

Discover GitHub Agentic Workflows, now in technical preview. Build automations using coding agents in GitHub Actions to handle triage, documentation, code quality, and more.

Level up design-to-code collaboration with GitHub’s open source Annotation Toolkit

Prevent accessibility issues before they reach production. The Annotation Toolkit brings clarity, compliance, and collaboration directly into your Figma workflow.

How to use the GitHub and JFrog integration for secure, traceable builds from commit to production

Connect commits to artifacts without switching tools.