Research: quantifying GitHub Copilot’s impact on developer productivity and happiness

When the GitHub Copilot Technical Preview launched just over one year ago, we wanted to know one thing: Is this tool helping developers? The GitHub Next team conducted research using a combination of surveys and experiments, which led us to both expected and unexpected answers.

Every day, we use tools and form habits to achieve more with less. Software development has produced so many tools and technologies meant to make work efficient that choosing among them can induce decision fatigue. When we first launched a technical preview of GitHub Copilot in 2021, our hypothesis was that it would improve developer productivity and, in fact, early users shared reports that it did. In the months following its release, we wanted to better understand and measure its effects with quantitative and qualitative research. To do that, we first had to grapple with the question: what does it mean to be productive?

Why is developer productivity so difficult to measure?

When it comes to measuring developer productivity, there is little consensus and there are far more questions than answers. For example:

- What are the “right” productivity metrics? [1, 2]

- How valuable are self-reports of productivity? [3]

- Is the traditional view of productivity (outputs over inputs) a good fit for the complex problem solving and creativity involved in development work? [4]

In a 2021 study, we found that developers’ own view of productivity has a twist: it’s more akin to having a good day. The ability to stay focused on the task at hand, make meaningful progress, and feel good at the end of a day’s work makes a real difference in developers’ satisfaction and productivity.

This isn’t a one-off finding, either. Other academic research shows that these outcomes are important for developers [5] and that satisfied developers perform better [6, 7]. Clearly, there’s more to developer productivity than inputs and outputs.

How do we think about developer productivity at GitHub?

Because AI-assisted development is a relatively new field, there is little prior research for us to draw upon. We wanted to measure GitHub Copilot’s effects, but first we had to ask what those effects are. After early observations and interviews with users, we surveyed more than 2,000 developers to learn at scale about their experience using GitHub Copilot. We designed our research approach with three points in mind:

- Look at productivity holistically. At GitHub we like to think broadly and sustainably about developer productivity and the many factors that influence it. We used the SPACE productivity framework to pick which aspects to investigate.

- Include developers’ first-hand perspective. We conducted multiple rounds of research including qualitative (perceptual) and quantitative (observed) data to assemble the full picture. We wanted to verify: (a) Do users’ actual experiences confirm what we infer from telemetry? (b) Does our qualitative feedback generalize to our large user base?

- Assess GitHub Copilot’s effects in everyday development scenarios. When setting up our studies, we took extra care to recruit professional developers, and to design tests around typical tasks a developer might work through in a given day.

Let’s dig in and see what we found!

Finding 1: Developer productivity goes beyond speed

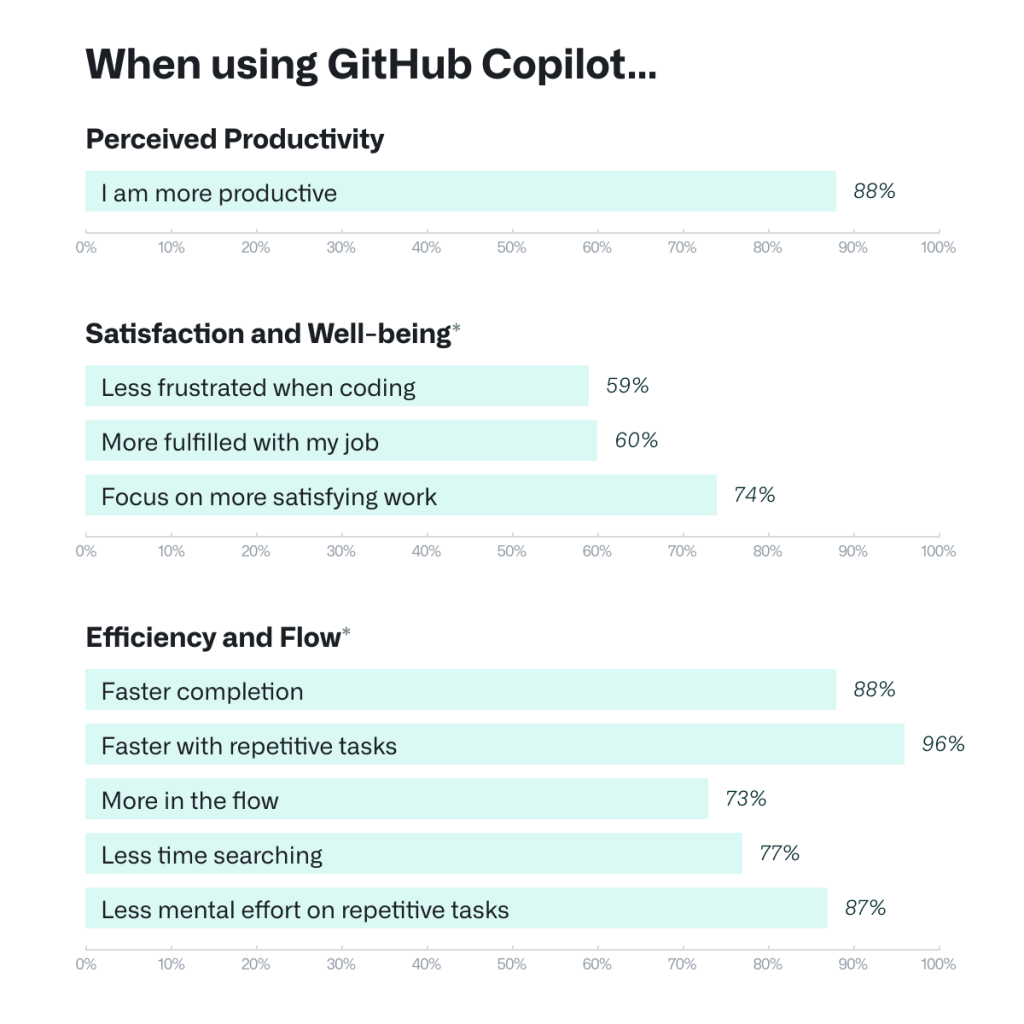

Through a large-scale survey, we wanted to see if developers using GitHub Copilot see benefits in other areas beyond speeding up tasks. Here’s what stood out:

- Improving developer satisfaction. Between 60–75% of users reported they feel more fulfilled with their job, feel less frustrated when coding, and are able to focus on more satisfying work when using GitHub Copilot. That’s a win for developers feeling good about what they do!

- Conserving mental energy. Developers reported that GitHub Copilot helped them stay in the flow (73%) and preserve mental effort during repetitive tasks (87%). That’s developer happiness right there, since we know from previous research that context switches and interruptions can ruin a developer’s day, and that certain types of work are draining [8, 9].

Table: Survey responses measuring dimensions of developer productivity when using GitHub Copilot

All questions were modeled on the SPACE framework.

Developers see GitHub Copilot as a productivity aid, but there’s more to it than that. One user described the overall experience:

(With Copilot) I have to think less, and when I have to think it’s the fun stuff. It sets off a little spark that makes coding more fun and more efficient.

The takeaway from our qualitative investigation was that letting GitHub Copilot shoulder the boring and repetitive work of development reduced cognitive load. This makes room for developers to enjoy the more meaningful work that requires complex, critical thinking and problem solving, leading to greater happiness and satisfaction.

Finding 2: … but speed is important, too

In the survey, developers reported that they complete tasks faster when using GitHub Copilot, especially repetitive ones. That was an expected finding (GitHub Copilot writes faster than a human, after all), but agreement above 90% was still a pleasant surprise. Developers overwhelmingly perceive that GitHub Copilot helps them complete tasks faster. Can we observe and measure that effect in practice? To find out, we conducted a controlled experiment.

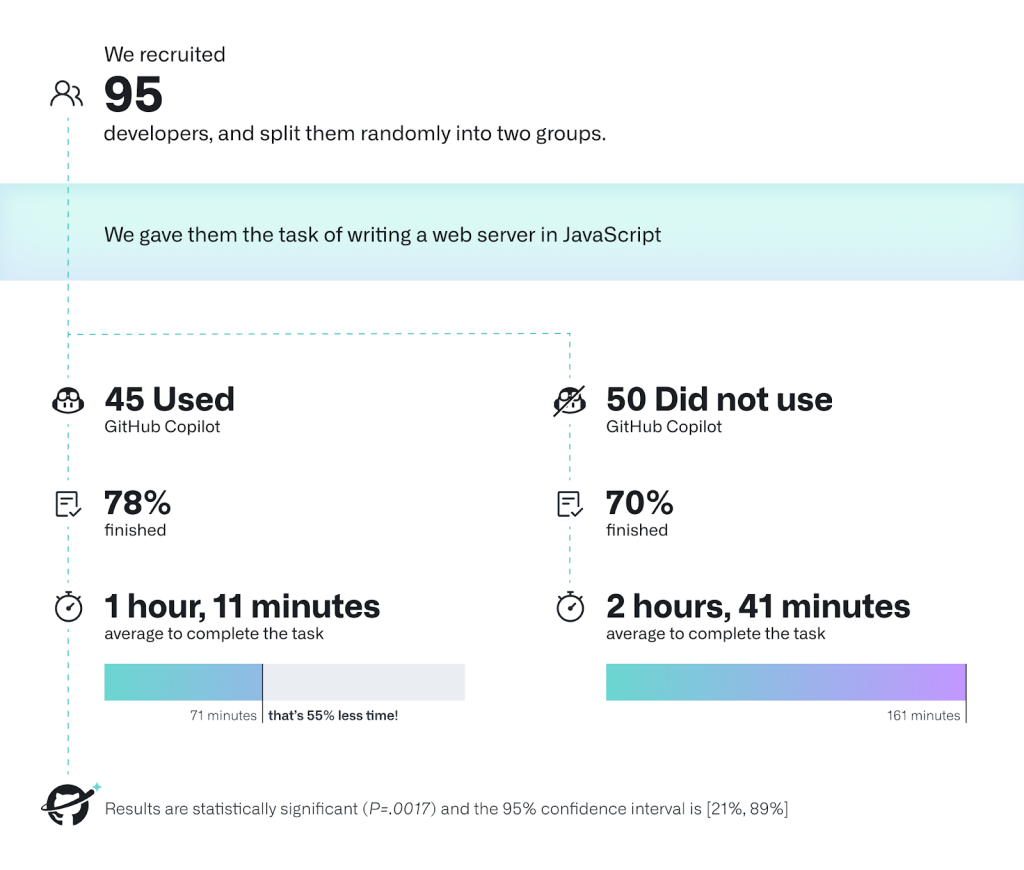

Figure: Summary of the experiment process and results

We recruited 95 professional developers, split them randomly into two groups, and timed how long it took them to write an HTTP server in JavaScript. One group used GitHub Copilot to complete the task, and the other didn’t. We tried to control as many factors as we could: all developers were already familiar with JavaScript, everyone received the same instructions, and we used GitHub Classroom to automatically score submissions for correctness and completeness with a test suite. We’ll soon share a behind-the-scenes blog post about how we set up the experiment!
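
The post doesn’t describe the assignment procedure beyond splitting developers randomly into two groups. As a minimal sketch of simple random assignment, assuming each participant has an integer ID, the Python below shuffles the IDs with a seeded generator and cuts the list in half. The function name `assign_groups`, the seed, and the near-even split are illustrative assumptions, not the study’s actual method; the post doesn’t report the size of each group.

```python
import random


def assign_groups(participant_ids, seed=0):
    """Hypothetical helper: randomly split participants into a Copilot
    (treatment) group and a no-Copilot (control) group."""
    rng = random.Random(seed)  # seeded so the assignment can be reproduced
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)  # random order removes any pattern in sign-up order
    half = len(shuffled) // 2
    # With an odd number of participants, the control group gets the extra one.
    return {"copilot": shuffled[:half], "control": shuffled[half:]}


# 95 participants with assumed IDs 1..95.
groups = assign_groups(range(1, 96), seed=42)
print(len(groups["copilot"]), len(groups["control"]))  # 47 48
```

Seeding the generator makes the assignment reproducible for auditing, while shuffling before the split means participant traits such as experience are balanced between the groups in expectation rather than by anyone’s choice.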

In the experiment, we measured how successful each group was, on average, at completing the task, and how long each group took to finish.

- The group that used GitHub Copilot had a higher rate of completing the task (78%, compared to 70% in the group without Copilot).

- The striking difference was speed: developers who used GitHub Copilot completed the task significantly faster, 55% faster than the developers who didn’t use it. Specifically, the developers using GitHub Copilot took on average 1 hour and 11 minutes to complete the task, while those who didn’t took on average 2 hours and 41 minutes. These results are statistically significant (P=.0017), and the 95% confidence interval for the percentage speed gain is [21%, 89%].
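
The headline figure follows from the two reported averages. Reading “55% faster” as the share of the control group’s average completion time that the Copilot group saved (our interpretation of the phrasing), a short Python check using the averages rounded to whole minutes gives 55.9%, close to the reported value; the small gap presumably comes from that rounding. The helper name `time_reduction` is hypothetical. This sketch reproduces only the point estimate: the p-value and the confidence interval depend on per-participant data that this post doesn’t include.

```python
def time_reduction(treatment_minutes: float, control_minutes: float) -> float:
    """Hypothetical helper: fraction of the control group's average time
    that the treatment group saved."""
    return (control_minutes - treatment_minutes) / control_minutes


# Reported averages, converted to minutes.
copilot_minutes = 1 * 60 + 11  # 1 hour 11 minutes = 71
control_minutes = 2 * 60 + 41  # 2 hours 41 minutes = 161

reduction = time_reduction(copilot_minutes, control_minutes)
speedup = control_minutes / copilot_minutes  # how many times longer the control group took

print(f"time saved: {reduction:.1%}")  # time saved: 55.9%
print(f"speed ratio: {speedup:.2f}x")  # speed ratio: 2.27x
```

Both numbers describe the same gap from different angles: the Copilot group needed about 44% of the control group’s time, so the control group took roughly 2.3 times as long.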

There’s more to uncover! We’re conducting more experiments and a more thorough analysis of the experiment data we already collected, looking into heterogeneous effects and potential effects on code quality, and we’re planning further academic publications to share our findings.

What do these findings mean for developers?

We’re here to support developers while they build software, and that includes working more efficiently and finding more satisfaction in their work. In our research, we saw that GitHub Copilot supports faster completion times, conserves developers’ mental energy, helps them focus on more satisfying work, and ultimately helps them find more fun in the coding they do.

We’re also hearing that these benefits are becoming material to engineering leaders in companies that ran early trials with GitHub Copilot. When they consider how to keep their engineers healthy and productive, they are thinking through the same lens of holistic developer wellbeing and promoting the use of tools that bring delight.

The engineers’ satisfaction with doing edgy things and us giving them edgy tools is a factor for me. Copilot makes things more exciting.

With the advent of GitHub Copilot, we’re not alone in exploring the impact of AI-powered code completion tools! In the realm of productivity, we recently saw an evaluation with 24 students and Google’s internal assessment of ML-enhanced code completion. More broadly, the research community is trying to understand GitHub Copilot’s implications in a number of contexts: education, security, the labor market, and developer practices and behaviors. We are all currently learning by trying GitHub Copilot in a variety of settings. This is an evolving field, and we’re excited about the findings that the research community, including us, will uncover in the months to come.

Acknowledgements

We are very grateful to all the developers who participated in the survey and experiments; we would be in the dark without your input! GitHub Next conducted the experiment in partnership with the Microsoft Office of the Chief Economist, specifically in collaboration with Sida Peng and Aadharsh Kannan.
