How to build an enterprise LLM application: Lessons from GitHub Copilot

The team behind GitHub Copilot shares its lessons for building an LLM app that delivers value to both individuals and enterprise users at scale.

| If you want to build and scale an application using a large language model (LLM), this article’s for you. |

It took us three years to develop GitHub Copilot before we officially launched it to the general public. To go from idea to production, we followed three stages—find it, nail it, scale it—loosely based on the “Nail It, Then Scale It” framework for entrepreneurial product development.

Here’s how it breaks down:

- Find it: Identify an impactful problem space for your LLM application

- Nail it: Create a smooth AI product experience

- Scale it: Get your LLM application ready and useable for general availability (GA)

Let’s get started.

Find it: Isolate the problem you want to solve

Sometimes the hardest part about creating a solution is scoping down a problem space. The problem should be focused enough to quickly deliver impact, but also big enough that the right solution will wow users. Additionally, you want to find a problem where the use of an LLM is the right solution (and isn’t integrated to just drive product engagement).

- Get clear on who you want to help. We saw that AI could drive efficiency, so we wanted to prioritize helping developers who were consistently crunched for time, enabling them to write code faster with less context switching.

-

Focus on a single problem, first. Rather than trying to address all developer problems with AI, we focused on one part of the software development lifecycle: coding functions in the IDE. At the time, most AI coding assistants could only complete a single line of code.

-

Balance product ambition with quality. While the GitHub Copilot team initially explored generating entire commits, the state of LLMs couldn’t support that function at a high enough quality at the time. Through additional testing, the team landed on code suggestions at the “whole function” level.

- Meet people where they are. When it comes to designing products for developers, an LLM app should amplify an existing tool or integrate into an existing workflow. A mantra of the GitHub Copilot team was, “It’s a bug if you have to change the way you code when using GitHub Copilot.” In practice, this means enabling developers to receive code suggestions without changing how they work.

Nail it: Iterate to create a smooth AI product experience

Product development with emerging tech, like generative AI, is often more of a winding path and a linear journey because so much is unknown and rapid advancements in the field can quickly open new doors. Building quick iteration cycles into the product development process allows teams to fail and learn fast. At GitHub, the main mechanism for us to quickly iterate is an A/B experimental platform.

According to Idan Gazit, Senior Director of Research for GitHub Next, “We have to design apps not only for models whose outputs need evaluation by humans, but also for humans who are learning how to interact with AI.”

- Put yourself in users’ shoes. GitHub employees have a culture of putting themselves in the shoes of their end users by “dogfooding” products before—and after—they’re released. In practice, this meant the GitHub Copilot team stood up a simple web interface where it could tinker with foundation models and explore ways to leverage those models in their own developer workflows.

We quickly found that a web interface was not the right canvas since it meant developers had to switch back and forth between their editor and the web browser. As a result, the team decided to focus on bringing GitHub Copilot to the IDE and making the AI capability modeless—or working in the background.

The developers on our team also noticed they often referenced multiple open tabs in the IDE while coding. This insight led them to experiment with a technique called neighboring tabs, where GitHub Copilot processes multiple files open in a developer’s IDE instead of just the single one the developer is working on. Neighboring tabs helped to increase the acceptance rates of GitHub Copilot’s suggestions by 5%.

-

Evaluate your testing tools. As our experiment continued, we had to scale our internal testing tools to be more versatile and powerful. While we initially relied on our own tools for testing, we ultimately switched to the Microsoft Experimentation Platform to optimize functionality based on the feedback and interaction at scale.

-

Avoid the sunk cost fallacy. This is when you’re reluctant to abandon a course of action because you’ve heavily invested in it—even when it’s clear switching gears would be more beneficial.

The GitHub and OpenAI teams initially believed every coding language would require its own fine-tuned AI model. But the field of generative AI was rapidly advancing, and our assumption turned out to be incorrect. In the end, OpenAI’s LLMs significantly improved and one model could handle a wide variety of coding languages and tasks.

-

Make a habit of revisiting old ideas. In a field that’s rapidly advancing, the functions that aren’t feasible with today’s LLMs might be possible with tomorrow’s.

In the beginning, we explored a chat interface for developers to ask coding questions. However, in early testing, users had much higher expectations for the capabilities and quality of coding suggestions than GitHub Copilot could deliver at the time. As a result, we deprioritized the feature. But as customers became familiar with AI chatbot following the emergence of ChatGPT and LLMs continued to evolve, an iterative chat experience, like GitHub Copilot Chat, became possible.

Scale it: Optimize quality, usability, and responsible use of AI to get to GA

Early feedback and technical previews are key to driving product improvements and getting your application to GA. Below you’ll find the steps we took before launching the GitHub Copilot technical preview, how we managed the technical preview and optimized user feedback, and how we prepared our internal infrastructure to handle demand at scale.

Optimize quality and usability

- Ensure consistent results. Because LMMs are probabilistic—meaning they don’t always produce the same, predictable outcomes—experimentation with them needs to be statistically based. One solution involves setting up a quality pipeline that addresses this unique challenge of building with LLMs.

For instance, when the GitHub Copilot team first decided to provide whole function coding suggestions, we also had to ensure output predictability and consistency, where the same prompt and context would produce the same suggestions from the AI model.

To achieve this, the team applied two strategies: changing the parameters to reduce the randomness of outputs and caching responses. Additionally, using cached responses instead of generating new responses to the same prompt not only reduced variability in suggestions, but it also improved performance.

-

Implement a waitlist for your technical preview. A waitlist allowed the GitHub Copilot team to manage questions, feedback, and comments—and ensure we could address them effectively. This approach also helped ensure we had a diverse set of early adopters across developers of varying experience levels to provide feedback.

- Take advantage of real user feedback. In one example, developers shared that an update had negatively affected the quality of the model’s coding suggestions. In response, the GitHub Copilot team implemented a new guardrail metric—the percentage of suggestions that are multi-line vs. single line—and tuned the model to ensure customers continued to get high-quality suggestions.

While the GitHub team actively dogfooded GitHub Copilot to understand what the experience was like for developers, we also benefited from developers outside GitHub adding diverse feedback across real-world use cases. The GitHub Copilot team engaged and interacted with technical preview users early, often, and on the users’ preferred platforms. This allowed us to actively respond to issues and feedback in real time.

-

Commit to iterating as you scale. When GitHub Copilot became generally available, the team not only had to improve the product, but also its infrastructure. When we experimented with and quickly iterated GitHub Copilot, it worked directly with the OpenAI API. As the product grew, we scaled our use of Microsoft Azure’s infrastructure to ensure GitHub Copilot had the quality, reliability, and responsible guardrails of a large-scale, enterprise-grade product.

-

Define the product’s key performance metrics. To optimize GitHub Copilot, we used early developer feedback to identify the right performance metrics, such as code acceptance rate and, eventually, code retention rate (which measures how much of the original code suggestion is kept or edited by a developer).

-

Optimize costs. The team worked to optimize the costs of delivering GitHub Copilot suggestions while balancing developer impact. For instance, before we decided on using ghost text—the gray text that flashes one coding suggestion while you type—the tool would eagerly generate 10 suggestions and display them all at once. This incurred upfront compute costs for suggestions two through 10, when most people choose the first one. But it also created a user experience cost, because those 10 suggestions pulled developers out of their workflow and into an evaluation mindset. “It was like paying to calculate the results that appear on the second page of a search engine—and making that second page grab your attention—even though most folks end up using the top result,” Gazit says.

Optimizing costs is an ongoing project, and we’re exploring new ideas to reduce costs while improving the user experience.

Optimize responsible use of AI

-

Prioritize security and trust. Feedback during GitHub Copilot’s technical preview reinforced the importance of suggesting code that is secure. In response, the team integrated code security capabilities to filter out suggestions that could contain security vulnerabilities (e.g., SQL injections and hard coded credentials) and used natural language filters from Azure OpenAI Service to filter out offensive content.*

-

Allow your community to help you. At GitHub, we deeply valued our extensive developer community for feedback on our products and collaborating with them to improve our offerings. With GitHub Copilot, our developer community was critical to understanding the potential around AI—and some concerns, too.

For instance, the developer community was concerned that GitHub Copilot suggestions might match public code. In response, the GitHub Copilot team created a filter to block suggestions matching public source code in GitHub public repositories that were longer than 150 characters.

Based on community input, the GitHub Copilot team also developed a code reference tool that includes links to public code that may match GitHub Copilot suggestions, so developers can review potential matches (and relevant licensing information), and make informed choices.

Develop a go-to-market strategy

-

Launch your product with product evangelists. Before launching the technical preview of GitHub Copilot in 2021, the team presented the prototype to influential members of the software developer community and GitHub Stars. This allowed us to launch the technical preview with an existing base of support and extend the preview’s reach to a broader range of users.

-

Get your product in front of individual users before going after businesses. The team decided to first sell licenses directly to developers, who would clearly benefit from an AI coding assistant. We paired this approach with a free trial program and monthly pricing, based on user survey findings that individuals prefer a simple and predictable subscription. Gaining traction among individual users helped to build a foundation of support and drive adoption at the enterprise level.

Key takeaways

We’re still in the early days of generative AI, so we’re keeping close tabs on the demand and need for this new technology. While each company and product will need to define its own approach to building an LLM app, here are some key learnings from our product journey with GitHub Copilot:

- Identify a focused problem and thoughtfully discern an AI’s use cases. This will ensure your app has greater impact and a faster time-to-market.

-

Integrate experimentation and tight feedback loops into the design process. This is especially critical when working with LLMs, where outputs are probabilistic and most end users are just learning how to interact with AI models.

-

As you scale, continue to leverage user feedback and prioritize user needs. Doing so will ensure that your product is built to deliver consistent results and real value.

If you’re looking for a problem to solve with an LLM app, check out our post on how companies are boosting productivity with generative AI. You can also take lessons from how GitHub used GitHub Actions to help an AI nonprofit, Ersilia, disseminate AI models to advance pharmaceutical research in low- and middle-income countries.

Tags:

Written by

Related posts

How AI is reshaping developer choice (and Octoverse data proves it)

AI is rewiring developer preferences through convenience loops. Octoverse 2025 reveals how AI compatibility is becoming the new standard for technology choice.

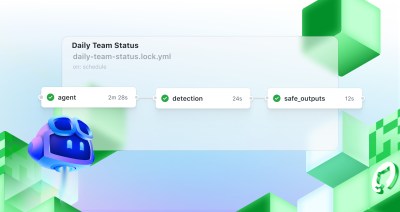

Automate repository tasks with GitHub Agentic Workflows

Discover GitHub Agentic Workflows, now in technical preview. Build automations using coding agents in GitHub Actions to handle triage, documentation, code quality, and more.

Continuous AI in practice: What developers can automate today with agentic CI

Think of Continuous AI as background agents that operate in your repository for tasks that require reasoning.