Surviving the SSHpocolypse


Over the past few days, we have had some issues with our SSH infrastructure affecting a small number of Git SSH operations. We apologize for the inconvenience, and are happy to report that we’ve completed one round of architectural changes in order to make sure our SSH servers keep their sparkle.

As we’ve said before, we use GitHub to build GitHub, so the recent intermittent SSH connection failures have been affecting us as well.

Before today, every Git operation over SSH would open its own connection to our MySQL database during the authentication step. In the past this wasn’t a problem, but we’ve started seeing sporadic issues as our SSH traffic has grown.

Realizing we were potentially on the cusp of a more serious situation, we patched our SSH servers to increase timeouts, retry connections to the database, and verbosely log failures. After this initial pass of incremental changes, aimed at pinpointing the source of the problem, we realized this piece of our infrastructure wasn’t as easily modified as we would have liked. We decided to take a more drastic approach.
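To make the "retry and log verbosely" idea concrete, here is a minimal Python sketch. It is not our actual patch (that lived in the SSH server itself); the function name `with_retries`, the logger name, and the default values are all made up for illustration.

```python
import logging
import time

# Hypothetical logger name, used only in this sketch.
logger = logging.getLogger("ssh_auth")


def with_retries(operation, attempts=3, delay_seconds=0.5, sleep=time.sleep):
    """Call operation() until it succeeds or the attempts run out.

    Every failure is logged, and the wait grows linearly between attempts.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except (ConnectionError, TimeoutError) as error:
            last_error = error
            # Verbose logging: record which attempt failed and why.
            logger.warning("attempt %d/%d failed: %s", attempt, attempts, error)
            if attempt < attempts:
                sleep(delay_seconds * attempt)
    # All attempts failed: surface the last error to the caller.
    raise last_error
```

Retries like this can hide a short blip, but they do nothing about the number of connections being opened, which is why we ended up going further.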

Starting on Tuesday, I worked with @jnewland to retire our 4+ year-old SSH patches and rewrite them all from scratch. Rather than opening a database connection for each SSH client, we call out to a shared library plugin (written in C) that lives in our Rails app. The library uses an HTTP endpoint exposed by our Rails app in order to check for authorized public keys. The Rails app is backed by a web server with persistent database connections, which keeps us from creating unbounded database connections, as we were doing previously. This is pretty neat because, like all code that lives in the GitHub Rails app, we can redeploy it near-instantly at any time. This gives us tremendous flexibility in continuing to scale our SSH services.
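For a rough picture of the new flow, here is a small Python sketch of a key lookup over HTTP. The real plugin is written in C, and everything specific below is an assumption for illustration: the endpoint URL, the `fingerprint` query parameter, the `{"authorized": true}` JSON response shape, and the function names.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical internal endpoint; the real URL is not part of this post.
AUTH_ENDPOINT = "http://localhost:3000/internal/ssh_keys"


def build_lookup_url(fingerprint, endpoint=AUTH_ENDPOINT):
    """Build the lookup URL for a public key fingerprint."""
    query = urllib.parse.urlencode({"fingerprint": fingerprint})
    return f"{endpoint}?{query}"


def http_fetch(url, timeout_seconds=2.0):
    """Fetch the response body over HTTP with a timeout."""
    with urllib.request.urlopen(url, timeout=timeout_seconds) as response:
        return response.read().decode("utf-8")


def is_key_authorized(fingerprint, fetch=http_fetch):
    """Ask the web app whether the key is authorized.

    The SSH side never opens a database connection here; the app behind
    the endpoint answers using its own persistent connections.
    """
    body = fetch(build_lookup_url(fingerprint))
    payload = json.loads(body)
    # Assumed response shape: {"authorized": true} or {"authorized": false}.
    return payload.get("authorized") is True
```

The `fetch` argument is injectable so the lookup can be exercised without a running server. The point of the design is that the number of database connections is bounded by the web server's worker pool rather than by the number of SSH clients.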

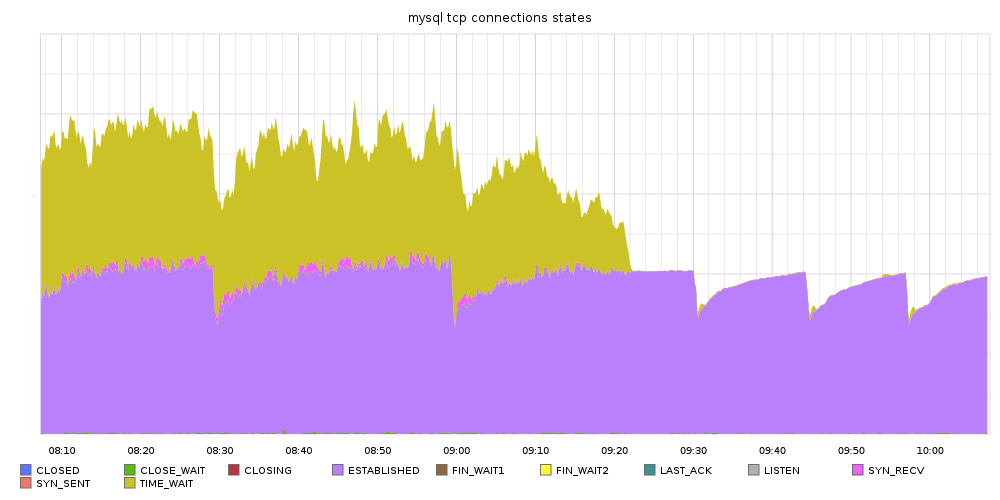

@jnewland deployed the changes around 9:20am Thursday and things seem to be in much better shape now. Below is a graph that shows connections to the MySQL database. You can see a drastic reduction in the number of database connections:

You can also observe an overall smaller number of SSH server processes, since they’re no longer all stuck on contention at the database server.

Of course, we are also exploring additional scalability improvements in this area.

Anywho, sorry for the mess. As always, please ping our support team if you see any further issues on github.com where Git over SSH hangs up randomly.
