Introducing AI-powered application security testing with GitHub Advanced Security

Learn how GitHub Advanced Security’s new AI-powered features can help you secure your code more efficiently than ever.

At GitHub, we’re focused on reducing workflow friction. Nowhere is this more important than when it comes to security. Developers need the ability to proactively secure their code right where it’s created—instead of testing for and remediating vulnerabilities after the fact. Embedded security is critical to delivering secure applications.

Over the past year, GitHub Advanced Security released more than 70 features to improve your application security testing and software supply chain capabilities. Dependabot can now group multiple version updates into a single pull request, code scanning can run variant analysis across up to 1,000 repositories with a single query, and secret scanning now offers validity checks and is free for all public repositories.

With AI, we have the potential to revolutionize how developers build secure applications from the start, and to radically transform the traditional definition of “shift left.” Today, we’re excited to announce previews of three AI-powered features in GitHub Advanced Security, along with a new dashboard for security overview.

Fix issues faster with code scanning autofix

Code scanning, powered by our static analysis engine CodeQL, now includes an autofix capability designed to help developers fix vulnerabilities faster. The result is higher productivity, less security debt, and more secure code.

With this new feature, you’ll receive AI-generated fixes for CodeQL JavaScript and TypeScript alerts directly in your pull requests. These are precise, actionable suggestions that help you quickly understand what the vulnerability is and how to remediate it. You can commit a fix instantly, resolving issues faster and keeping new vulnerabilities from creeping into your codebase. So, how does this work?

After CodeQL analysis completes, GitHub automatically queries a large language model (LLM) for fixes to any new alerts. The fixes are posted as code suggestions on the pull request’s ‘Conversation’ and ‘Files changed’ tabs. You can review and accept them as-is, or edit them before merging into your codebase.
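To make this concrete, here is a minimal TypeScript sketch of the kind of issue and fix involved. It assumes a pattern that static analysis such as CodeQL reports as incomplete string escaping. The "after" function is a hand-written, illustrative fix. It is not actual autofix output, since real suggestions depend on the specific alert and the surrounding code.

```typescript
// Before: the kind of pattern static analysis flags as incomplete escaping.
// With a string (not a regex) as the first argument, String.prototype.replace
// replaces only the FIRST occurrence, so later quotes are left unescaped.
function escapeQuotesUnsafe(input: string): string {
  return input.replace("'", "\\'");
}

// After: an illustrative, hand-written fix (not actual autofix output).
// Escape existing backslashes first so they cannot neutralize the added escapes,
// then escape every single quote using a regex with the global (g) flag.
function escapeQuotes(input: string): string {
  return input.replace(/\\/g, "\\\\").replace(/'/g, "\\'");
}

console.log(escapeQuotesUnsafe("it's Bob's")); // it\'s Bob's  (second quote missed)
console.log(escapeQuotes("it's Bob's"));       // it\'s Bob\'s
```

Note the order of operations in the fixed version. Backslashes are escaped before quotes, so an input that already contains a backslash cannot turn an added escape into a literal backslash.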

The benefit is a low-friction remediation experience: developers can fix vulnerabilities as they code, shortening time to remediation while keeping the accuracy that code scanning users have come to expect.

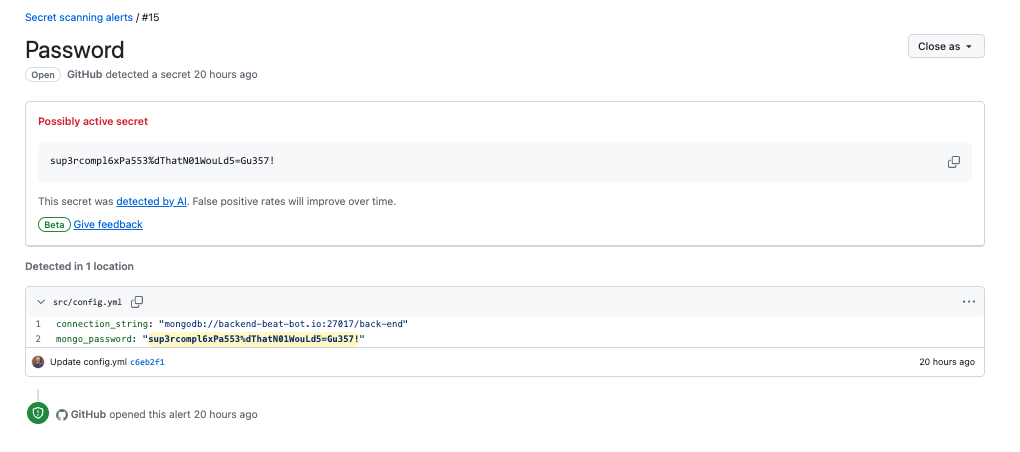

Detect leaked passwords with secret scanning

Passwords are a challenging category for secret detection. They are commonly leaked and, as a result, are a popular target for attackers seeking unauthorized access. Unfortunately, they are also hard for secret detection tools to find, because they don’t follow a specific pattern. The latest generation of LLMs presents an opportunity to find these passwords with fewer false positives than traditional methods.
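The contrast between structured secrets and passwords can be sketched in TypeScript. Both patterns below are illustrative assumptions, not GitHub’s detectors:

- The first loosely models a structured token with a fixed `ghp_` prefix and a fixed-length body.
- The second is a naive keyword heuristic for passwords.

The heuristic flags a placeholder and misses a password stored under an unrelated variable name. That is the gap described in the paragraph above.

```typescript
// Illustrative patterns only; neither is GitHub's actual detector.

// Structured secret: a fixed prefix plus a fixed-length body allows a precise regex.
// Assumption: loosely modeled on a "ghp_" token prefix with a 36-character body.
const structuredToken = /\bghp_[A-Za-z0-9]{36}\b/;

// Naive password heuristic: a quoted value assigned to a variable named like "password".
const naivePassword = /\b(?:password|passwd|pwd)\s*[:=]\s*["'][^"']+["']/i;

const samples: string[] = [
  'const token = "ghp_0123456789abcdefghijklmnopqrstuvwxyz";',
  'const password = "correct horse battery staple";', // real-looking password: flagged
  'const password = "<your password here>";',          // placeholder: false positive
  'const dbCreds = "Tr0ub4dor&3";',                    // password, unusual name: missed
];

for (const line of samples) {
  // Neither regex uses the g flag, so test() keeps no state between calls.
  console.log(structuredToken.test(line), naivePassword.test(line), line);
}
```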

Now in limited public beta, secret scanning uses AI to detect generic or unstructured secrets in your code.

Findings appear in a separate tab called “Other,” alongside other low-confidence patterns. There, security managers and repository owners can review alerts about potentially active leaked passwords while still focusing on remediating the highest-confidence findings in the existing experience. If they determine that an alert is legitimate, they can work with the developer to resolve it.

Save time with regular expression generator for custom patterns

While GitHub’s secret scanning partner program is robust, with 180 partners and over 225 supported patterns, you may still need custom patterns to detect secret types unique to your organization. Custom patterns are regular expressions, and writing them is like learning a small programming language: there are many parameters and nuances to get right.

To make creating and updating custom patterns easier and faster, GitHub now includes an AI-powered authoring experience. In a form, you answer a few simple questions, and GitHub generates the custom pattern as a regular expression. You can then do a dry run in real time to confirm the pattern scans as expected before saving it.
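For a sense of what such a pattern looks like, here is a hand-written TypeScript sketch of a custom pattern and a minimal dry run. The `acme_` token format and the `dryRun` helper are hypothetical. JavaScript `RegExp` is used only for illustration; secret scanning documents its own supported regular expression syntax, which may differ.

```typescript
// Hypothetical internal token format: "acme_" followed by exactly 32 lowercase hex characters.
// \b on both sides keeps the pattern from matching inside a longer alphanumeric run.
// (JavaScript RegExp is used for illustration; secret scanning's regex syntax may differ.)
const acmeToken = /\bacme_[0-9a-f]{32}\b/;

// Hypothetical helper for a minimal "dry run": list every match in sample text.
function dryRun(pattern: RegExp, text: string): string[] {
  const re = new RegExp(pattern.source, "g"); // fresh global copy, so lastIndex starts at 0
  const found: string[] = [];
  let m: RegExpExecArray | null;
  while ((m = re.exec(text)) !== null) {
    found.push(m[0]);
  }
  return found;
}

const sample = [
  "ACME_TOKEN=acme_0123456789abcdef0123456789abcdef",  // 32 hex chars: match
  "too long: acme_0123456789abcdef0123456789abcdef00", // 34 hex chars: rejected
  "placeholder: acme_<your-token-here>",               // not hex: rejected
].join("\n");

// Expect one match: the 32-character token on the first line.
console.log(dryRun(acmeToken, sample));
```

Checking near-misses (too long, placeholder text) alongside a real-looking token is the point of a dry run. It shows whether the pattern is too loose or too strict before it starts raising alerts.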

These updates are more than time savers: they help you get the coverage you need to keep your secrets secure.

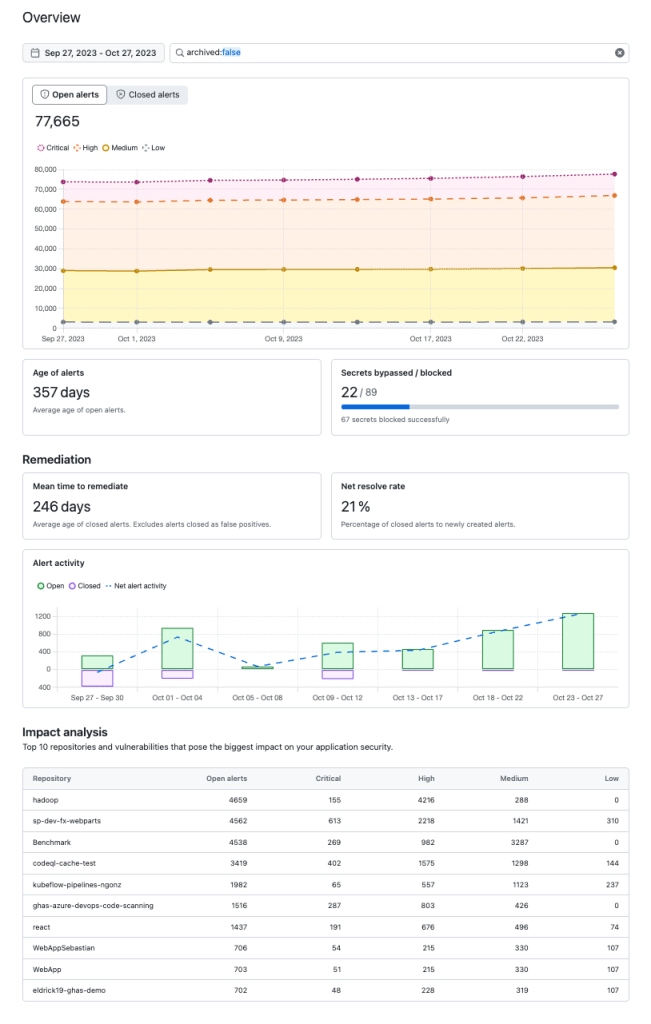

View more crucial data with the new security overview dashboard

While GitHub Advanced Security is tailored for developers, every successful security program is a collaboration between developers and security teams. Security overview’s new dashboard gives security managers and administrators historical trend analysis for their security alerts on GitHub.

With this dashboard, you can understand your organization’s overall security posture through three lenses: risk, remediation, and prevention.

- Risk. Shows security findings across your repositories, where findings are increasing or decreasing, and which categories they fall into.

- Remediation. Helps you understand how effective your remediation practices are, for example, how many security findings have been closed and your organization’s overall mean time to remediation.

- Prevention. Offers a clear view into where security issues have been prevented through features like push protection.

The dashboard tiles update dynamically as you filter by date range, repository, and other criteria, so you can answer the most pressing questions about your AppSec program faster than ever before.
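As a rough illustration of the remediation metric and the filtering described above, the TypeScript sketch below computes mean time to remediation over a date range and an optional repository. The `Alert` shape and the `meanTimeToRemediationDays` function are hypothetical. The dashboard’s exact definition may differ, for example in whether the date window applies to when alerts were opened or when they were fixed. Here, it applies to the fix date.

```typescript
// Hypothetical alert shape; field names are illustrative, not GitHub's API schema.
interface Alert {
  repo: string;
  createdAt: Date;
  fixedAt: Date | null; // null while the alert is still open
}

const DAY_MS = 24 * 60 * 60 * 1000;

// Mean time to remediation in days for alerts fixed within [from, to),
// optionally restricted to one repository. Returns null when nothing matches.
function meanTimeToRemediationDays(alerts: Alert[], from: Date, to: Date, repo?: string): number | null {
  const durations: number[] = [];
  for (const a of alerts) {
    if (a.fixedAt === null) continue; // open alerts have no remediation time yet
    const fixedMs = a.fixedAt.getTime();
    if (fixedMs < from.getTime() || fixedMs >= to.getTime()) continue; // date-range filter
    if (repo !== undefined && a.repo !== repo) continue;               // repository filter
    durations.push(fixedMs - a.createdAt.getTime());
  }
  if (durations.length === 0) return null;
  const total = durations.reduce((sum, d) => sum + d, 0);
  return total / durations.length / DAY_MS;
}

const alerts: Alert[] = [
  { repo: "web", createdAt: new Date("2023-11-01T00:00:00Z"), fixedAt: new Date("2023-11-03T00:00:00Z") }, // 2 days
  { repo: "web", createdAt: new Date("2023-11-05T00:00:00Z"), fixedAt: new Date("2023-11-09T00:00:00Z") }, // 4 days
  { repo: "api", createdAt: new Date("2023-11-02T00:00:00Z"), fixedAt: null },                              // still open
];

const from = new Date("2023-11-01T00:00:00Z");
const to = new Date("2023-12-01T00:00:00Z");
console.log(meanTimeToRemediationDays(alerts, from, to));        // 3
console.log(meanTimeToRemediationDays(alerts, from, to, "api")); // null
```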

Better security, more happiness

Today’s announcements will help your developers and security teams more effectively collaborate on finding and fixing security issues where they already work, inside GitHub. We’re thrilled to harness the power of AI to improve the relevance of alerts, speed up remediation, and improve the administrative experience—with the ultimate goal of making your teams happier and more productive, and your code more secure.
