Evolution of GitHub’s data centers


Over the past 18 months we’ve made a significant investment in GitHub’s physical infrastructure. The goal of this work is to improve the redundancy and global availability of our system. In doing so, we’ve solidified the foundation upon which we will expand our compute and storage footprint in support of our growing user base.

Overview

We have four facilities: two transit hotels, which we call points of presence (POPs), and two data centers. To give an idea of our scale, we store petabytes of Git data for users of GitHub.com and handle around 100Gb/s of traffic across transit, internet exchanges, and private network interfaces in order to serve thousands of requests per second. Our network and facilities are built using a hub-and-spoke design. We operate our own backbone between our Seattle and northern Virginia POPs, which provides more consistent latency and throughput over protected fiber.

The POPs are a few cabinets primarily composed of networking equipment. They’re placed in facilities with a high concentration of transit providers and access to regional internet exchanges. Those facilities don’t store customer data; rather, they’re focused on internet and backbone connectivity as well as direct connect and private network interfaces to Amazon Web Services. Today we have a POP in northern Virginia and one in the Seattle metro area, each of which is independently connected to transit providers and to the data center in its geographic region.

Those well-connected POPs have some downsides, though, and those downsides led us to keep separate data centers for storing customer data and serving requests. For one, it’s more difficult to get space in the facilities we use as POPs because of the demand for space in facilities with a variety of transit options. Because of that demand, there is often less power available, which prevents us from deploying the high-density storage and compute cabinets we favor. Therefore, the data centers are in standalone cages in less well-connected facilities, and we use metro waves between the POPs and data centers along with passive dense wavelength division multiplexing (DWDM) to connect them. Today the POPs are one-to-one with data centers, so we have a data center on each coast of the continental United States.
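To make the hub-and-spoke layout concrete, here is a minimal Python sketch that models the four facilities as an undirected graph and finds the path traffic takes between the two data centers. The site names are hypothetical, and the model covers only the links described above (a metro wave between each POP and its data center, plus the backbone between the POPs); transit, internet exchange, and AWS connections are left out.

```python
from collections import deque

# Hypothetical site names. The post describes one POP and one data center
# per coast, joined by metro waves, with a backbone between the two POPs.
LINKS = [
    ("dc-west", "pop-seattle"),       # metro wave over passive DWDM
    ("pop-seattle", "pop-virginia"),  # backbone between the POPs
    ("pop-virginia", "dc-east"),      # metro wave over passive DWDM
]


def build_graph(links):
    """Build an undirected adjacency map from (a, b) link pairs."""
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def shortest_path(graph, src, dst):
    """Breadth-first search returning the hop-by-hop path, or None."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # Walk the predecessor chain back to the source.
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in sorted(graph[node]):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None


graph = build_graph(LINKS)
print(shortest_path(graph, "dc-east", "dc-west"))
# ['dc-east', 'pop-virginia', 'pop-seattle', 'dc-west']
```

In this model a data center has no link except the one to its regional POP, so every path between the coasts (and, by extension, to transit) passes through a POP.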

Inside the facilities

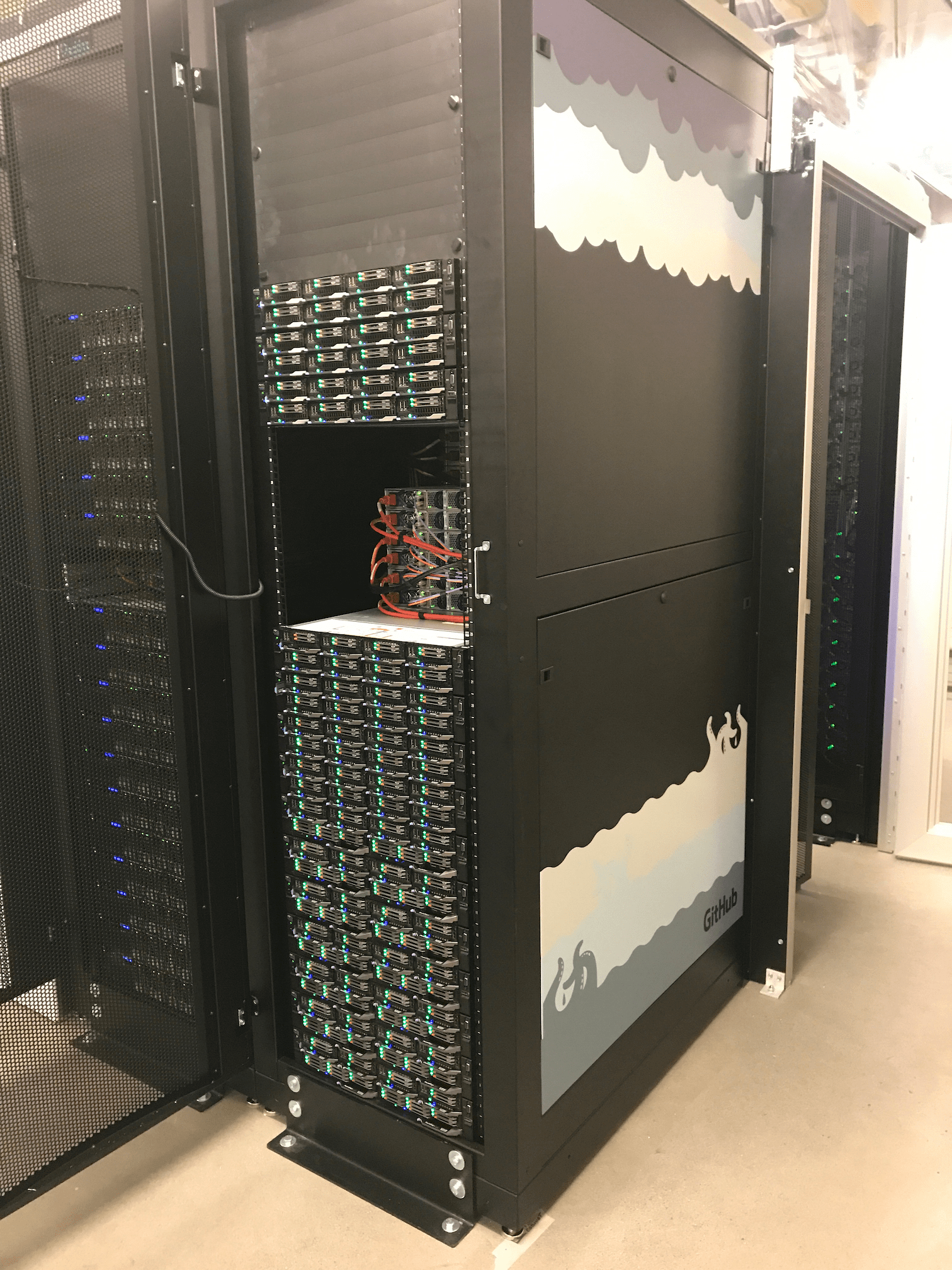

Although cages in POPs and data centers are laid out and deployed somewhat differently, there is still a set of shared principles about how we build our facilities. We aim to keep cabinet types as homogeneous as possible to increase repeatability. There is one type of cabinet in the POPs, which includes management and production border routers, optical networking gear, and enough compute hardware to run core services. Our data centers have three different types of cabinets: networking, compute, and storage. The majority of the cabinets are for compute and storage hardware, each of which is kept in its own set of cabinets rather than mixed, so that the tiers can be grown independently. The other thing we focus on across both the POPs and data centers is a clear separation between our infrastructure and things like cross connects run by data center providers. In the POPs we use pre-labeled and cabled points of demarcation so that adding new transit, transport, or direct connect providers is a matter of interacting with a single side of one patch panel.

The data centers are built to simplify adding new capacity through the use of structured cabling. When the west coast data center was built, the structured cabling was deployed for the whole space, including cabinet positions not yet in use. Each storage and compute cabinet is connected to the structured cabling via an overhead patch panel. Each cabinet-side patch panel is connected to a pair of matching panels in a set of two-post racks that reside between a pair of networking cabinets. Connecting a cabinet to the spine and aggregation switches in the networking cabinets is a matter of patching a set of links from the leaf switches and the console device to the overhead panel, then patching from the two-post racks to the networking cabinets. The two-post to networking patching is done using pre-labeled and tapered fiber looms, which help keep the network cabinets tidy despite the high density of fiber that lands in them.
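The benefit of pre-deploying structured cabling is that the permanent runs already exist, so turning up a cabinet reduces to patch cords at either end. The following is a minimal sketch of that idea; the port-labelling scheme, the device names, and the choice to alternate links between the two networking cabinets are hypothetical, not GitHub’s actual conventions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Patch:
    """A single patch cord between two labelled ports."""
    from_port: str
    to_port: str


def turn_up_patches(
    cabinet: str, devices: list[str], net_cabinets: tuple[str, str]
) -> list[Patch]:
    """List the patch cords needed to connect one cabinet to the network.

    Hypothetical model: port N on the cabinet's overhead panel is already
    permanently cabled to port N in the two-post racks, so each device needs
    only two patches, one at each end of that existing run.
    """
    patches = []
    for port, device in enumerate(devices, start=1):
        # Cabinet end: leaf switch uplink or console device to the overhead panel.
        patches.append(Patch(device, f"{cabinet}/overhead/{port}"))
        # Network end: two-post rack to a networking cabinet, alternating
        # between the pair (an assumed distribution scheme).
        target = net_cabinets[(port - 1) % 2]
        patches.append(Patch(f"{cabinet}/two-post/{port}", f"{target}/{cabinet}-{port}"))
    return patches


plan = turn_up_patches("cab-12", ["leaf-a:up1", "leaf-b:up1", "console"], ("net-1", "net-2"))
for patch in plan:
    print(f"{patch.from_port} -> {patch.to_port}")
```

The sketch never creates a new permanent run: every entry in the plan is a patch cord at the cabinet or at the two-post racks, which mirrors why pre-cabling the whole space makes turn-up repeatable.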

Expanding compute and storage

We work closely with a cabinet integrator to handle rack and stack, cabling, validation, and logistics. When a project to expand compute and/or storage capacity in one of our data centers gets kicked off, the first step is either to use an existing cabinet configuration we’ve built with them or to design a new one. Unless we are deploying a new type of cabinet or changing the configuration of chassis and sleds in an existing one, this process involves getting quotes and lead times from vendors, ensuring the cabinet layouts match what we expect, and then placing an order. Once all the components are sourced, the rack integrators build the cabinets and ultimately pack them into a custom shipping crate suitable for freight shipment.

Upon arrival at one of our facilities, the cabinet is landed in its target location in the data center. Network configurations are pre-generated and already on the switches by the time the cabinets leave the build site, so all that’s required to turn up a new set of cabinets is patching each one, landing power circuits, and powering up the hardware inside them. Data about the cabinet layout from the integrator is imported into our hardware management system, gPanel, to map serial numbers to rack units and enclosures. gPanel provides hardware discovery via a stateless image that runs on all chassis that aren’t currently installed. On first boot, each chassis enters an unknown state and then moves through a state machine that configures devices like the iDRAC, makes sure the BMC, BIOS, and drive firmware match the versions we run in production elsewhere, and then validates the health of the machine. Once each of these steps has been completed, the box ends up in the ready state and is made available to be requested via gPanel.
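The provisioning flow can be sketched as a small state machine. This is a hypothetical illustration, not gPanel’s implementation: the state names, the firmware baseline, and the step functions are assumptions, and each step only updates fields on an in-memory record rather than talking to real hardware.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    UNKNOWN = "unknown"
    CONFIGURE_BMC = "configure_bmc"            # e.g. iDRAC settings
    RECONCILE_FIRMWARE = "reconcile_firmware"  # BMC, BIOS, and drive firmware
    VALIDATE = "validate"                      # machine health checks
    READY = "ready"
    FAILED = "failed"


# Hypothetical production baseline; real version strings are not given in the post.
PRODUCTION_FIRMWARE = {"bmc": "1.0", "bios": "2.0", "drive": "3.0"}


@dataclass
class Chassis:
    serial: str
    firmware: dict[str, str]
    healthy: bool = True  # stands in for the result of real hardware tests
    bmc_configured: bool = False
    state: State = State.UNKNOWN
    history: list[State] = field(default_factory=lambda: [State.UNKNOWN])


def configure_bmc(c: Chassis) -> bool:
    c.bmc_configured = True  # placeholder for applying BMC settings
    return True


def reconcile_firmware(c: Chassis) -> bool:
    # "Flash" every component whose version differs from production.
    for component, version in PRODUCTION_FIRMWARE.items():
        if c.firmware.get(component) != version:
            c.firmware[component] = version
    return all(c.firmware.get(k) == v for k, v in PRODUCTION_FIRMWARE.items())


def validate(c: Chassis) -> bool:
    return c.bmc_configured and c.healthy


# Each non-terminal state maps to the step run in it and the state entered on success.
TRANSITIONS = {
    State.UNKNOWN: (lambda c: True, State.CONFIGURE_BMC),  # discovered by the stateless image
    State.CONFIGURE_BMC: (configure_bmc, State.RECONCILE_FIRMWARE),
    State.RECONCILE_FIRMWARE: (reconcile_firmware, State.VALIDATE),
    State.VALIDATE: (validate, State.READY),
}


def provision(c: Chassis) -> State:
    """Advance a chassis until it reaches READY or a step fails."""
    while c.state in TRANSITIONS:
        step, next_state = TRANSITIONS[c.state]
        c.state = next_state if step(c) else State.FAILED
        c.history.append(c.state)
    return c.state


box = Chassis(serial="ABC123", firmware={"bmc": "0.9", "bios": "2.0", "drive": "3.0"})
print(provision(box).value)  # ready
```

In this sketch a chassis that fails any step stops in a failed state instead of reaching ready, so only machines that pass every check would become requestable. Keeping the transitions in a table makes it easy to add or reorder steps without touching the driver loop.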

The deployment of new storage and compute cabinets is highly reproducible using this approach, allowing us to confidently expand our physical footprint inside the data centers. In its current form the process allows us to make hardware available for engineers across the company to be provisioned the same day the cabinets are patched and powered up.

Here’s a compute cabinet we recently added to our Seattle data center:

Conclusion

The improvements we’ve made to our data centers and network have enabled us to continue to confidently expand our physical infrastructure. Introducing a repeatable process to add new capacity and decoupling the regional network edge from our compute and storage systems has enabled us to enter the next stage of our infrastructure’s growth.

If data center and network engineering excites you, we’re looking to add more SREs to the team.
