GitHub Copilot bring your own key (BYOK) enhancements

Bring your own key (BYOK) for GitHub Copilot now supports additional APIs, new configuration options, and more provider integrations.

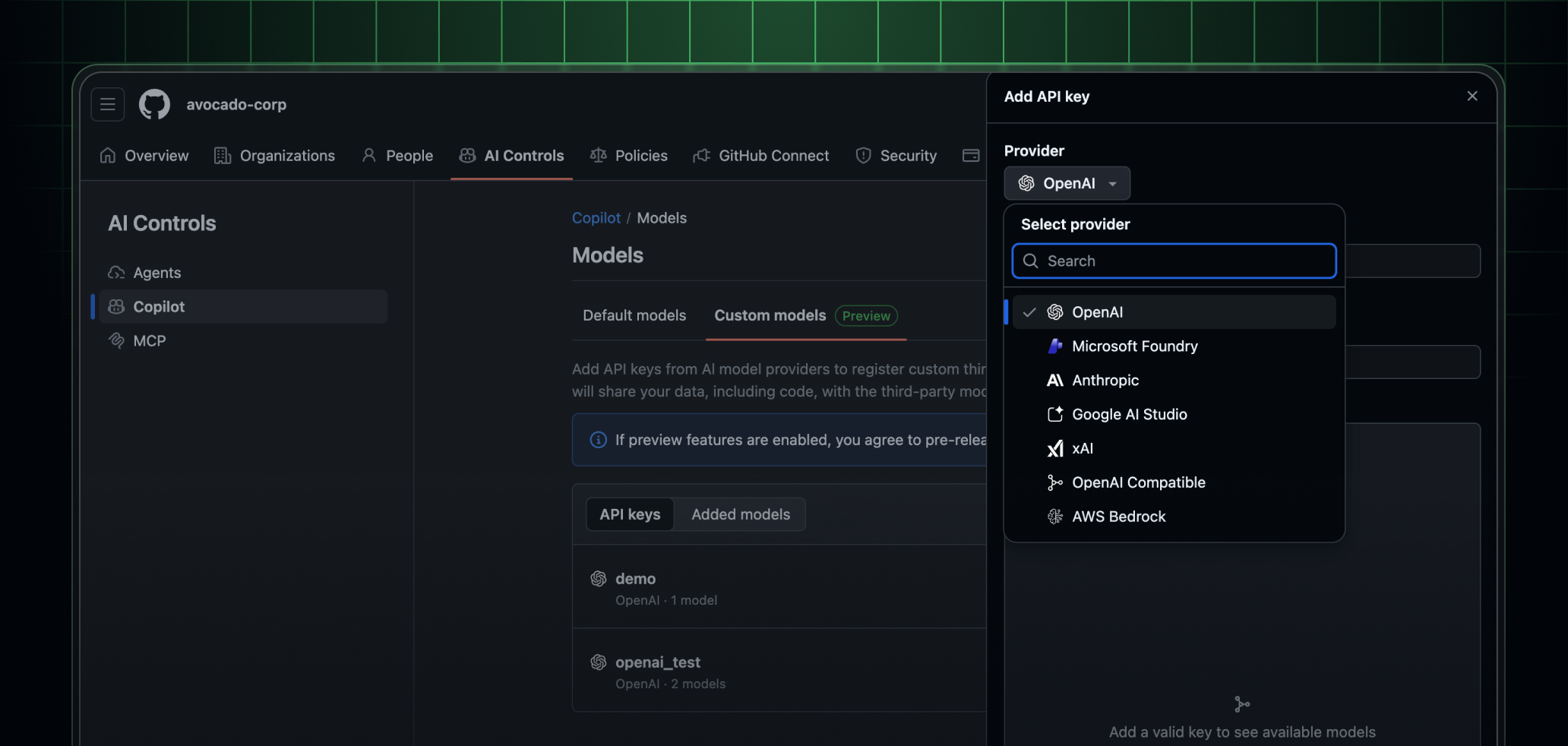

With this update, enterprises can connect models that use the Responses API, configure a maximum context window for model interactions, enable streaming, and choose from an expanded list of supported LLM providers.

What’s new

New provider options

You can now connect API keys from AWS Bedrock, Google AI Studio, and any OpenAI‑compatible provider. These options join Anthropic, Microsoft Foundry, OpenAI, and xAI as supported BYOK choices.

Support for the Responses API

BYOK now supports models that use the Responses API, enabling structured outputs and richer multimodal interactions.

Ability to set a maximum context window

Admins can define the maximum context window for BYOK models to help balance cost, performance, and response quality.

Streaming responses for faster interaction

Streaming output from connected models lets Copilot display responses as they are generated, rather than waiting for the full response to complete.

Start using the new BYOK features today

These new capabilities are available now in public preview for GitHub Enterprise and Business customers. Connect your LLM provider’s API key in your enterprise or organization settings and start using your models in Copilot Chat and supported IDEs. Learn more by visiting our BYOK documentation.

Help us shape the future

We are just getting started, and your feedback will guide what comes next. Join the discussion in the GitHub Community to share feedback and connect with other developers.