Parth Sehgal

Parth Sehgal is a senior software engineer at GitHub who works on the Secret Protection team.

Learn how the Secret Protection engineering team collaborated with GitHub Copilot coding agent to expand validity check coverage.

Accidentally committing secrets to source code is a mistake every developer dreads — and one that’s surprisingly easy to make. GitHub Secret Protection was built for moments like these, helping teams catch exposed credentials before they cause harm.

Secret Protection works by creating alerts for sensitive credentials found in code, and it offers several features to help mitigate leaks even further.

Aaron and I have worked extensively on validity checks during our time at GitHub. They have become a core part of our product, and many users rely on them day to day in their triage and remediation workflows. Secret Protection determines the validity of a leaked credential by testing it against an unobtrusive API endpoint associated with the token’s provider.
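To make the idea concrete, here is a minimal sketch of what a validity check could look like. It is not our production code: the `Validity` names, the `classify_status` mapping, and the injected `probe` callable are all assumptions made for illustration. In particular, treating only a 401 response as proof that a token is inactive is a simplification, since providers differ in how they report revoked or under-scoped credentials.

```python
from enum import Enum
from typing import Callable


class Validity(Enum):
    """Hypothetical validity states for a leaked credential."""
    ACTIVE = "active"
    INACTIVE = "inactive"
    UNKNOWN = "unknown"


def classify_status(status_code: int) -> Validity:
    """Map the HTTP status of a read-only identity request to a validity state.

    Assumption: 2xx means the provider accepted the token, 401 means it
    rejected the token, and anything else (rate limits, server errors,
    permission errors) tells us nothing definite.
    """
    if 200 <= status_code < 300:
        return Validity.ACTIVE
    if status_code == 401:
        return Validity.INACTIVE
    return Validity.UNKNOWN


def check_validity(token: str, probe: Callable[[str], int]) -> Validity:
    """Run one probe against the provider and classify the outcome.

    `probe` is a hypothetical callable that sends the token to an
    unobtrusive endpoint (such as /me) and returns the HTTP status code.
    Network failures are reported as UNKNOWN rather than INACTIVE.
    """
    try:
        return classify_status(probe(token))
    except OSError:
        return Validity.UNKNOWN


def fake_probe(token: str) -> int:
    """Stand-in for a real HTTP call so the sketch runs offline."""
    return 200 if token == "live-token" else 401


print(check_validity("live-token", fake_probe))
print(check_validity("revoked-token", fake_probe))
```

Passing the probe in as a callable keeps the classification logic testable without touching a real provider, which matters when the endpoint is someone else's production API.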

We released this feature in 2023, starting with validity check support for the most common token types we saw leaked in code (e.g., AWS keys, GCP credentials, Slack tokens). Secret Protection got to a point where it was validating roughly 80% of newly created alerts. While the less common token types remained (and continue to remain) important, our team shifted focus to other work that delivered the greatest value for our customers.

Towards the end of 2024 and into 2025 we gradually saw the advent of agentic AI, and soon coding agents started to gain mainstream popularity. Our team got together earlier this year and had a thought: Could we successfully use coding agents to help cover this gap?

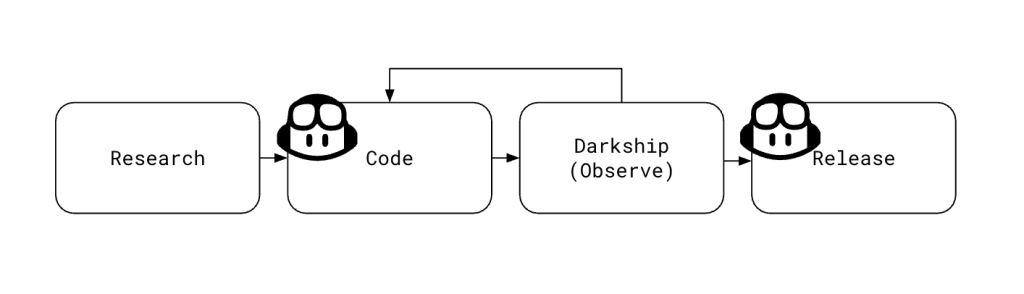

To identify opportunities for automation, we first took a close look at our existing process for adding validation support for new token types. This framework-driven workflow included the following steps for each token type:

The coding and release parts (second and fourth steps) of this process were the obvious first choices for automation.

The first step above involves finding a suitable endpoint to validate a new token type. We typically use /me (or equivalent) endpoints if they exist. Sometimes they do exist, but they’re buried in documentation and not easy to find. We experimented with handing off this research to Copilot, but it sometimes struggled. It could not reliably find the same least-intrusive endpoint an engineer would choose. We also discovered that creating and testing live tokens, and interpreting nuanced API changes, remained tasks best handled by experienced engineers.

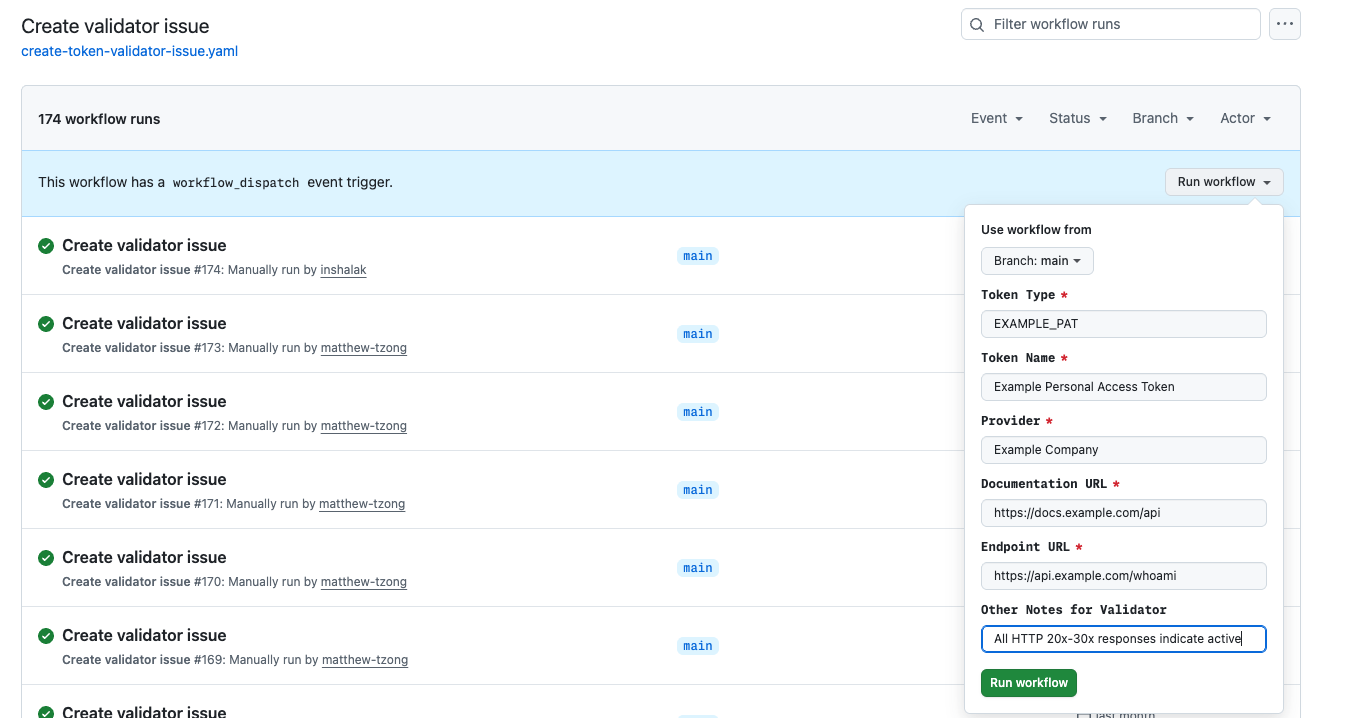

Copilot did an excellent job of making code changes. The output of the human-driven research task was fed into a manually dispatched GitHub workflow that created a detailed issue we could assign to the coding agent. The issue served as a comprehensive prompt that included background on the project, links to API documentation, and various examples to look at. We learned that the coding agent sometimes struggled with following links, so we added an extra field for any additional notes.
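As a rough illustration of how research output can be turned into an issue that doubles as a prompt, consider the sketch below. The `ResearchResult` fields, the section headings, and the example URLs are hypothetical; our actual workflow and issue template are not reproduced here.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ResearchResult:
    """Hypothetical output of the human-driven research step."""
    token_type: str
    endpoint: str
    docs_url: str
    notes: str = ""  # extra context for details the agent may miss in linked docs


def build_issue(result: ResearchResult) -> Dict[str, str]:
    """Assemble an issue title and body that can be assigned to a coding agent."""
    sections = [
        "## Background",
        f"Add a validity check for `{result.token_type}` tokens "
        "using the existing validator framework.",
        "## Endpoint",
        f"`{result.endpoint}`",
        "## API documentation",
        result.docs_url,
    ]
    # Only include the notes section when the researcher supplied something.
    if result.notes:
        sections += ["## Additional notes", result.notes]
    return {
        "title": f"Add validity check for {result.token_type}",
        "body": "\n\n".join(sections),
    }


issue = build_issue(
    ResearchResult(
        token_type="example_api_key",
        endpoint="GET https://api.example.com/me",
        docs_url="https://docs.example.com/authentication",
        notes="Expect 401 for revoked keys; copy key response details here.",
    )
)
print(issue["title"])
print(issue["body"])
```

The notes field is where details from linked documentation can be pasted directly into the prompt, so the agent does not have to follow the link to find them.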

After assigning an issue to Copilot, the coding agent automatically generated a pull request, instantly turning our research and planning into actionable, feedback-ready code. We treated code generated by the agent just like code written by our team: it went through automated testing, a human review process, and was eventually deployed by engineers. GitHub provided a streamlined process for requesting changes from the agent — just add comments to a pull request. The agent is not perfect, and it did make some mistakes. For example, we expected that Copilot would follow documentation links in a prompt and reference the information there as it implemented its change, but in practice we found that it sometimes missed details or didn’t follow documentation as intended.

Our framework included the ability to darkship a validator. That is, we observed the results of our new code without writing validity inferences to the database. It wasn’t uncommon for our engineers to encounter some amount of drift in API documentation and actual behavior. This stage allowed us to safely fix any errors. When we were ready to fully release a change, we asked Copilot to make a small configuration change to take the new validator out of darkship mode.
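The darkship idea can be sketched in a few lines. The `ValidityRecorder` class and its fields are hypothetical and stand in for whatever the real framework uses; the point is only that a single flag decides whether a result is merely observed or also persisted.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ValidityRecorder:
    """Hypothetical sink for validator results with a darkship switch."""
    darkship: bool = True  # new validators start out observe-only
    observed: List[Tuple[str, str]] = field(default_factory=list)
    database: Dict[str, str] = field(default_factory=dict)

    def record(self, alert_id: str, validity: str) -> None:
        # Always keep an observation so engineers can compare the
        # validator's behavior against expectations.
        self.observed.append((alert_id, validity))
        # Only write the inference once the validator is fully released.
        if not self.darkship:
            self.database[alert_id] = validity


recorder = ValidityRecorder()
recorder.record("alert-1", "active")   # observed, not persisted

recorder.darkship = False              # the small configuration change
recorder.record("alert-2", "inactive") # observed and persisted
print(recorder.observed)
print(recorder.database)
```

Because leaving darkship mode is a one-line configuration change, it is a low-risk task to hand to a coding agent once the observed results look right.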

Prior to our AI experimentation, progress was steady but slow. We were validating 32 partner token types, and it had taken us several months to get there as engineers balanced onboarding new checks with day-to-day feature development. With Copilot, we onboarded almost 90 new types in just a few weeks as our engineering interns, @inshalak and @matthew-tzong, directed Copilot through this process.

Coding agents are a viable option for accelerating framework-driven, repeatable workflows. In our case, Copilot was a genuine force multiplier: being able to fan out the results of N research tasks to N agents working in parallel was huge. Copilot delivers speed and scale, but it’s no replacement for human engineering judgment. Always review, test, and verify the code it produces. We were successful because we grafted Copilot into very specific parts of this framework.

Our experiment using Copilot coding agent made a measurable impact: we dramatically accelerated our coverage of token types, parallelized the most time-consuming parts of the workflow, and freed up engineers to focus on the nuanced research and review stages. Copilot didn’t replace the need for thoughtful engineering, but it did prove to be a powerful teammate for framework-driven, repeatable engineering tasks.

A few things we learned along the way:

We see huge potential for coding agents wherever there are repeatable engineering tasks. We are experimenting with similar processes in other onboarding workflows in our project. We’re confident that many other teams and projects across the industry have similar framework-driven workflows that are great candidates for this kind of automation.

If you’re looking to bring automation into your own workflow, take advantage of what’s already repeatable, invest in good prompts, and always keep collaboration and review at the center.

Thanks for reading! We’re excited to see how the next generation of agentic AI and coding agents will continue to accelerate software engineering — not just at GitHub, but across the entire developer ecosystem.
