GitHub Copilot now has a better AI model and new capabilities

We’re launching new improvements to GitHub Copilot to make it more powerful and more responsive for developers.

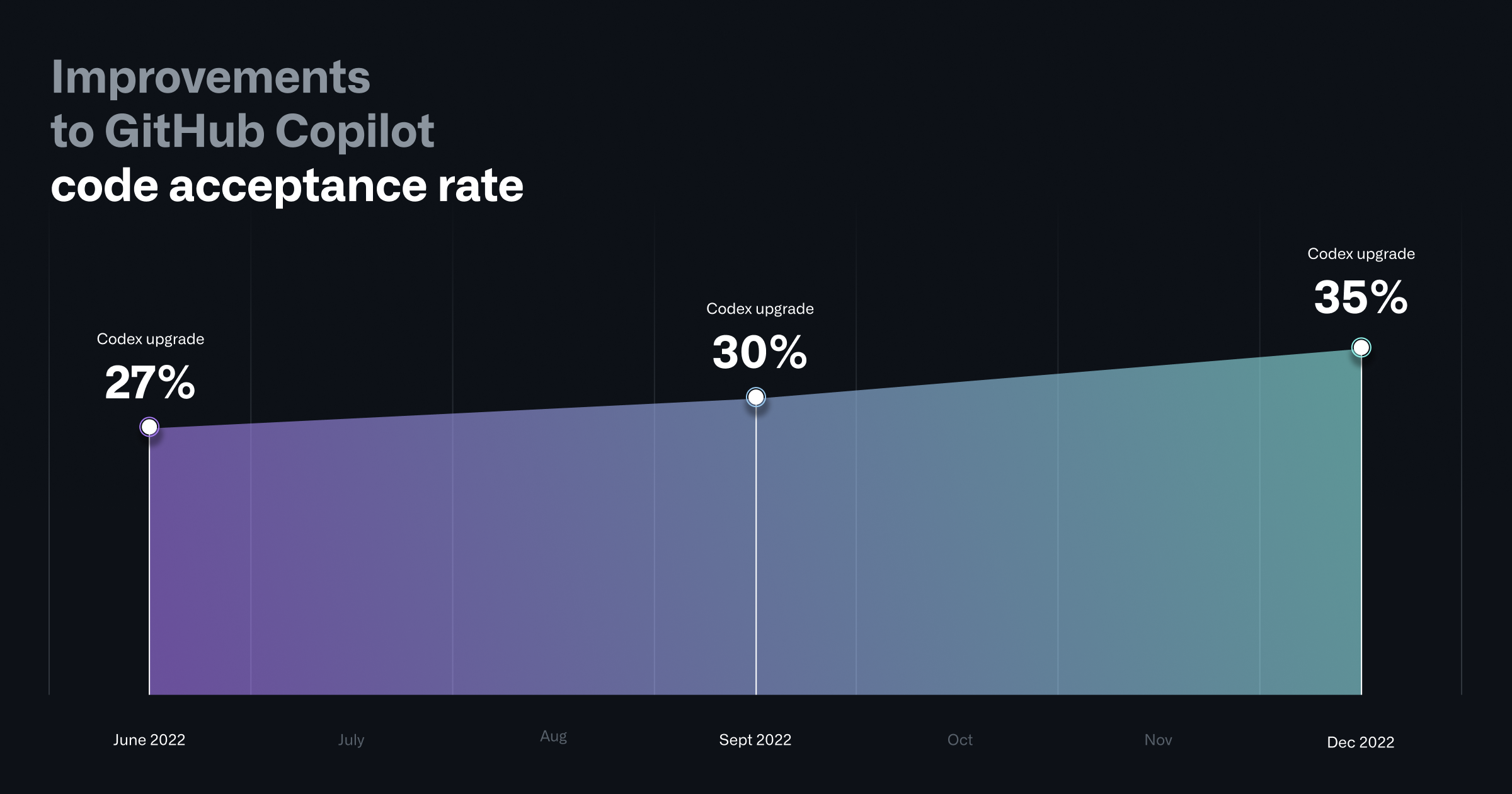

Since we first launched GitHub Copilot, we have worked to improve the quality and responsiveness of its code suggestions by upgrading the underlying Codex model. We also developed a new security vulnerability filter to make GitHub Copilot’s code suggestions more secure and help developers identify insecure coding patterns as they work.

This week, we’re launching new updates across Copilot for Individuals and Copilot for Business—including new simple sign-ups for organizations—to make GitHub Copilot more powerful and more responsive for developers.

Let’s dive in.

A more powerful AI model and better code suggestions

To improve the quality of GitHub Copilot’s code suggestions, we updated the underlying Codex model. The result is a large-scale improvement in suggestion quality and a reduction in the time it takes to serve those suggestions to users.

Case in point: When we first launched GitHub Copilot for Individuals in June 2022, GitHub Copilot generated an average of more than 27% of developers’ code files. Today, GitHub Copilot is behind an average of 46% of developers’ code across all programming languages, and in Java that number jumps to 61%.

This work means that developers using GitHub Copilot are now coding faster than before thanks to more accurate and more responsive code suggestions.

Here are the key technical improvements we made to achieve this:

- An upgraded AI Codex model: We upgraded GitHub Copilot to a new OpenAI Codex model, which delivers better results for code synthesis.

- Better context understanding: We improved GitHub Copilot with a new paradigm called Fill-In-the-Middle (FIM), which gives developers a better way to craft prompts for code suggestions. Instead of only considering the prefix of the code, it also leverages known code suffixes and leaves a gap in the middle for GitHub Copilot to fill. This way, it now has more context about your intended code and how it should align with the rest of your program. FIM in GitHub Copilot consistently produces higher quality code suggestions, and we’ve developed various strategies to deliver it without any added latency.

- A lightweight client-side model: We updated the GitHub Copilot extension for VS Code with a lightweight client-side model that improved overall acceptance rates for code suggestions. To do this, GitHub Copilot now uses basic information about the user’s context (for example, whether the last suggestion was accepted) to reduce the frequency of unwanted suggestions when they might disrupt a developer’s workflow. This resulted in a 4.5% reduction in unwanted suggestions, helping GitHub Copilot better respond to each developer using it. And with a second, improved iteration of this client-side model that we shipped in January 2023, we’ve seen even greater improvements in overall code acceptance rates.
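To make the Fill-In-the-Middle idea more concrete, here is a minimal sketch of how a prompt could be assembled from the code before and after the cursor. It is an illustration only, not GitHub Copilot’s actual prompt format: the sentinel markers `<PRE>`, `<SUF>`, and `<MID>`, and the helper names `split_at_cursor` and `splice`, are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical sentinel markers. Real FIM-capable models define their own
# special tokens; these strings are placeholders for illustration.
PREFIX_TAG = "<PRE>"
SUFFIX_TAG = "<SUF>"
MIDDLE_TAG = "<MID>"


@dataclass
class FimPrompt:
    """Code before the cursor (prefix) and after the cursor (suffix)."""
    prefix: str
    suffix: str

    def render(self) -> str:
        # The model sees both sides of the gap, then is asked to produce
        # the missing middle after the final marker.
        return f"{PREFIX_TAG}{self.prefix}{SUFFIX_TAG}{self.suffix}{MIDDLE_TAG}"


def split_at_cursor(document: str, cursor: int) -> FimPrompt:
    """Split a document into prefix and suffix at the cursor offset."""
    if not 0 <= cursor <= len(document):
        raise ValueError("cursor is outside the document")
    return FimPrompt(prefix=document[:cursor], suffix=document[cursor:])


def splice(prompt: FimPrompt, completion: str) -> str:
    """Insert a completion into the gap between prefix and suffix."""
    return prompt.prefix + completion + prompt.suffix


if __name__ == "__main__":
    source = "def add(a, b):\n    \n\nprint(add(1, 2))\n"
    cursor = source.index("    ") + 4  # cursor sits on the empty function body
    prompt = split_at_cursor(source, cursor)
    print(prompt.render())
    print(splice(prompt, "return a + b"))
```

A prefix-only prompt would stop at the empty function body. With the suffix included, the call `add(1, 2)` is visible to the model, which is the extra context the FIM approach relies on.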

Filtering out security vulnerabilities with a new AI system

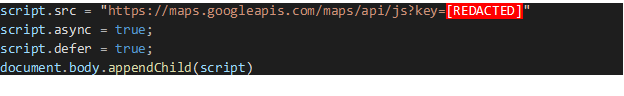

We also launched an AI-based vulnerability prevention system that blocks insecure coding patterns in real time to make GitHub Copilot suggestions more secure. Our model targets the most common vulnerable coding patterns, including hardcoded credentials, SQL injections, and path injections.

The new system leverages LLMs to approximate the behavior of static analysis tools—and since GitHub Copilot runs advanced AI models on powerful compute resources, it’s incredibly fast and can even detect vulnerable patterns in incomplete fragments of code. This means insecure coding patterns are quickly blocked and replaced by alternative suggestions.

Here is an example of a vulnerable pattern generated by the language model:

Note: GitHub Copilot can generate novel strings for forms of identification like keys or passwords by mimicking patterns seen in data. The examples below do not necessarily show existing or usable credentials. Regardless, showing these credentials within code is an unsafe coding pattern which we address with our solution.
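As an illustrative sketch of two of the pattern families named above (hardcoded credentials and SQL injection), the following contrasts an unsafe form with a safer alternative. This is not output from GitHub Copilot and not how its filter works; the key value is a placeholder, and the names `EXAMPLE_API_KEY`, `load_api_key`, `find_user_unsafe`, and `find_user_safe` are hypothetical.

```python
import os
import sqlite3

# Unsafe pattern: a secret embedded directly in source code.
# The value is a placeholder, not a real or usable credential.
HARDCODED_API_KEY = "example-placeholder-not-a-real-key"


def load_api_key(env_var: str = "EXAMPLE_API_KEY") -> str:
    """Safer pattern: read the secret from the environment at runtime."""
    key = os.environ.get(env_var)
    if key is None:
        raise RuntimeError(f"environment variable {env_var} is not set")
    return key


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Unsafe pattern: user input is concatenated into the SQL text,
    # so crafted input can change the meaning of the query.
    query = "SELECT id, name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safer pattern: a parameterized query keeps the input as data.
    query = "SELECT id, name FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


def make_demo_db() -> sqlite3.Connection:
    """Build a small in-memory database for the demonstration."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)",
                     [("alice",), ("bob",)])
    return conn


if __name__ == "__main__":
    conn = make_demo_db()
    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # the injected condition matches every row
    print(find_user_safe(conn, payload))    # the payload is treated as a literal name
```

The unsafe and safe query functions differ by only a line or two, which is why this kind of pattern is easy to accept by accident in a suggestion.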

This application of AI is fundamentally changing how we can prevent vulnerabilities from entering our code.

With GitHub Copilot, we’re enabling developers to reach that magical flow state—which includes delivering fast, accurate vulnerability prevention right from the editor. This, combined with GitHub Advanced Security’s vulnerability detection and remediation capabilities, provides an end-to-end seamless experience for developers to secure their code.

The prevention mechanism is the first critical step in helping developers build more secure code with GitHub Copilot. We’ll continue to teach our LLMs to distinguish between vulnerable and non-vulnerable code patterns. You can help us improve GitHub Copilot by reporting any vulnerable patterns you identify in code suggestions to copilot-safety@github.com.

Start building with GitHub Copilot

An upgraded AI model, better code suggestions, improved responsiveness, and heightened security—all of these improvements are now available to developers using Copilot for Individuals and Copilot for Business. As we move forward, we will continue to improve the developer experience for people using GitHub Copilot, and these updates are just the beginning.
