Kevin Merchant

Staff Manager, Applied Science, GitHub Code|AI. I lead an applied research team to fine-tune custom models for GitHub Copilot and develop agentic tools and evaluation pipelines.

GitHub Copilot’s next edit suggestions just got faster, smarter, and more precise thanks to new data pipelines, reinforcement learning, and continuous model updates built for in-editor workflows.

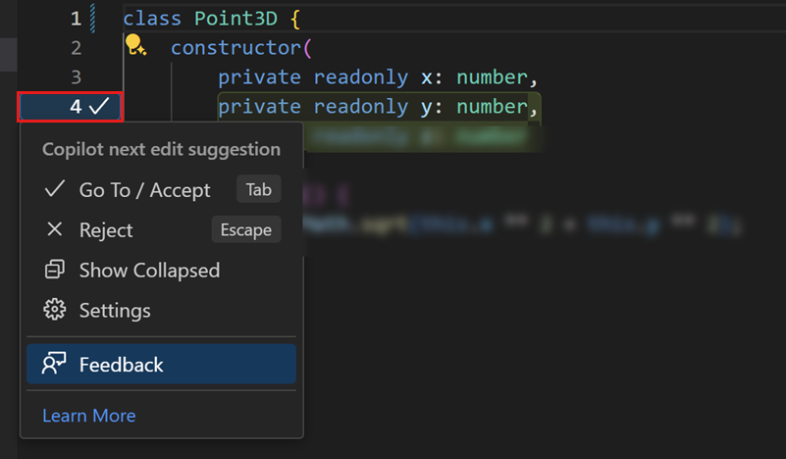

Editing code often involves a series of small but necessary changes ranging from refactors to fixes to cleanup and edge-case handling. In February, we launched next edit suggestions (NES), a custom Copilot model that predicts the next logical edit based on the code you’ve already written. Since launch, we’ve shipped several major model updates, including the newest release earlier this month.

In this post, we’ll look at how we built the original model, how we’ve improved it over time, what’s new, and what we’re building next.

Predicting the next edit is a harder problem than predicting the next token. NES has to understand what you’re doing, why you’re doing it, and what you’ll likely do next. That means:

Frontier models didn’t meet our quality and latency expectations. The smaller ones were fast but produced low-quality suggestions, while the larger ones were accurate but too slow for an in-editor experience. To get both speed and quality, we needed to train a custom model.

NES isn’t a general-purpose chat model. It’s a low-latency, task-specific model that runs alongside the editor and responds in real time. Building it meant aligning model training, prompt design, and UX design around a single goal: seamless editing inside the IDE. That required tight coordination with the VS Code team, because the model only works when the whole system is co-designed end to end.

This “AI-native” approach, where every part of the experience evolves together, is very different from training a general-purpose model for any task or prompt. It’s how we believe AI features should be built: end to end, with the developer experience at the center.

The hard part wasn’t the architecture; it was the data. We needed a model that could predict the next edit a developer might make, but no existing dataset captured real-time editing behavior.

Our first attempt used internal pull request data. It seemed reasonable: pull requests contain diffs, and diffs look like edits. But internal testing revealed limitations. The model was overly cautious: it was reluctant to touch unfinished code, hesitant to suggest changes to the line a user was typing, and often chose to do nothing. In practice, it performed worse than a vanilla LLM.

That failure made the requirement clear: we needed data that reflected how developers actually edit code in the editor, not how code looks after review.

Pull request data wasn’t enough because it:

So we reset our approach and built a much richer dataset through a large-scale custom data collection effort that captured code editing sessions from a set of internal volunteers. Data quality proved to be key at this stage: a smaller volume of high-quality edit data produced better models than a larger volume of less curated data.

Supervised fine-tuning (SFT) of a model on this custom dataset produced the first model to outperform the vanilla models. This initial model provided a significant lift to quality and served as a foundation for the next several NES releases.

After developing several successful NES models with SFT, we focused on two key limitations of our training approach:

To address these two limitations, we turned to reinforcement learning (RL) techniques to further refine our model. Starting with the well-trained NES model from SFT, we optimized the model using a broader set of unlabeled data by designing a grader capable of accurately judging the quality of the model’s edit suggestions. This allows us to refine the model outputs and achieve higher model quality.

The key ideas in the grader design can be summarized as follows:

Continued post-training with RL has improved the model’s generalization capability. Specifically, RL extends training to unlabeled data, which expands the volume and diversity of data available for training and removes the requirement that the ground-truth next edit be known. With this broader data, the training process consistently explores harder cases, which prevents the model from collapsing into simple scenarios.

Additionally, RL lets us express our preferences through the grader by explicitly defining criteria for “bad edit suggestions.” The trained model is then better at avoiding bad edit suggestions when it faces out-of-distribution cases.
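
To make the idea of grading edits against explicit “bad edit” criteria concrete, here is a minimal Python sketch. It is a toy under stated assumptions, not the production grader: the `EditSuggestion` type, the three rule-based checks, the `MAX_ADDED_LINES` budget, and the equal-penalty scoring are all hypothetical placeholders. The point it illustrates is that a reward can be computed from the suggestion alone, with no ground-truth next edit.

```python
from dataclasses import dataclass
from typing import Callable, List

MAX_ADDED_LINES = 20  # hypothetical budget for how much an edit may grow the code


@dataclass(frozen=True)
class EditSuggestion:
    original: str   # code before the suggested edit
    suggested: str  # code after the suggested edit


# Each check returns True when the suggestion is "bad" in some way.
# These are illustrative placeholders, not the real grader's criteria.
def deletes_everything(s: EditSuggestion) -> bool:
    return bool(s.original.strip()) and not s.suggested.strip()


def unbalanced_parens(s: EditSuggestion) -> bool:
    return s.suggested.count("(") != s.suggested.count(")")


def too_large(s: EditSuggestion) -> bool:
    added = len(s.suggested.splitlines()) - len(s.original.splitlines())
    return added > MAX_ADDED_LINES


BAD_EDIT_CRITERIA: List[Callable[[EditSuggestion], bool]] = [
    deletes_everything,
    unbalanced_parens,
    too_large,
]


def grade(s: EditSuggestion) -> float:
    """Reward in [0, 1]: 1.0 minus an equal penalty per violated criterion."""
    violations = sum(check(s) for check in BAD_EDIT_CRITERIA)
    return 1.0 - violations / len(BAD_EDIT_CRITERIA)


# Score several sampled candidates for the same context; no labels are needed.
candidates = [
    EditSuggestion("total = add(1)", "total = add(1, 2)"),  # plausible edit
    EditSuggestion("total = add(1)", "total = add(1, 2"),   # unbalanced parens
    EditSuggestion("total = add(1)", ""),                   # deletes the code
]
rewards = [grade(c) for c in candidates]
```

In an RL loop, per-candidate rewards like these would feed the policy update, which is omitted here.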

Our most recent NES release builds on that foundation with improvements to data, prompts, and architecture:

We train dozens of model candidates per month to ensure the version we ship offers the best experience possible. We modify our training data, adapt our training approach, experiment with new base models, and target fixes for specific feedback we receive from developers. Every new model goes through three stages of evaluation: offline testing, internal dogfooding, and online A/B experiments.

Since shipping the initial NES model earlier this year, we’ve rolled out three major model updates, each balancing speed and precision.

The table below summarizes the quality metrics measured for each release. We measure the rate at which suggestions are shown to developers, the rate at which developers accept suggestions, and the rate at which developers hide the suggestion from the UI. These are A/B test results comparing the current release with production.

| Release | Shown rate | Acceptance rate | Hide rate |
|---|---|---|---|
| April | +17.9% | +10.0% | -17.5% |
| May | -18.8% | +23.2% | -20.0% |
| November | -24.5% | +26.5% | -25.6% |
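
As a rough illustration of how changes like those in the table could be computed from A/B test counts, here is a minimal Python sketch. The `ArmCounts` schema, the choice of denominators (opportunities for the shown rate, shown suggestions for the acceptance and hide rates), the relative-change formula, and every count below are assumptions made up for this example. They are not our telemetry definitions or real data.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ArmCounts:
    """Raw event counts for one arm of an A/B test (hypothetical schema)."""
    opportunities: int  # moments where a suggestion could have been shown
    shown: int          # suggestions actually shown to the developer
    accepted: int       # shown suggestions the developer accepted
    hidden: int         # shown suggestions the developer hid from the UI

    def rates(self) -> Dict[str, float]:
        return {
            "shown_rate": self.shown / self.opportunities,
            "acceptance_rate": self.accepted / self.shown,
            "hide_rate": self.hidden / self.shown,
        }


def relative_change(control: ArmCounts, treatment: ArmCounts) -> Dict[str, float]:
    """Percent change of each rate in the treatment arm relative to control."""
    c, t = control.rates(), treatment.rates()
    return {name: 100.0 * (t[name] - c[name]) / c[name] for name in c}


# Made-up counts: the candidate shows fewer suggestions, but more get accepted.
production = ArmCounts(opportunities=10_000, shown=4_000, accepted=1_000, hidden=400)
candidate = ArmCounts(opportunities=10_000, shown=3_000, accepted=900, hidden=240)

deltas = relative_change(production, candidate)
for name, delta in deltas.items():
    print(f"{name}: {delta:+.1f}%")
```

Under these assumptions, a lower shown rate paired with a higher acceptance rate and a lower hide rate is the pattern of a model that suggests less often but more precisely.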

Developer feedback has guided almost every change we’ve made to NES. Early on, developers told us the model sometimes felt too eager and suggested edits before they wanted them. Others asked for the opposite: a more assertive experience where NES jumps in immediately and continuously. Like the tabs-vs-spaces debate, there’s no universal preference, and “helpful” looks different depending on the developer.

So far, we’ve focused on shipping a default experience that works well for most people, but that balance has shifted over time based on real usage patterns:

Looking ahead, we’re exploring adaptive behavior where NES adjusts to each developer’s editing style over time, becoming more aggressive or more restrained based on interaction patterns (e.g., accepting, dismissing, or ignoring suggestions). That work is ongoing, but it’s directly informed by the feedback we receive today.
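
One way to picture this kind of adaptation is a per-developer confidence threshold that drifts with interaction history. The Python sketch below is purely illustrative: the `AdaptiveThreshold` class, the step sizes, the bounds, and the notion of a per-suggestion confidence score are all hypothetical, and none of this describes how NES works today or will work.

```python
from enum import Enum
from typing import Dict


class Interaction(Enum):
    ACCEPTED = "accepted"
    DISMISSED = "dismissed"
    IGNORED = "ignored"


# Hypothetical step sizes: accepting lowers the bar (more aggressive),
# dismissing raises it sharply, ignoring raises it slightly.
STEP: Dict[Interaction, float] = {
    Interaction.ACCEPTED: -0.02,
    Interaction.DISMISSED: 0.05,
    Interaction.IGNORED: 0.01,
}


class AdaptiveThreshold:
    """Tracks the minimum confidence a suggestion needs before it is shown."""

    def __init__(self, initial: float = 0.5, lower: float = 0.2, upper: float = 0.9):
        self.value = initial
        self.lower = lower
        self.upper = upper

    def update(self, interaction: Interaction) -> float:
        # Shift the threshold, then clamp it to [lower, upper].
        shifted = self.value + STEP[interaction]
        self.value = min(self.upper, max(self.lower, shifted))
        return self.value

    def should_show(self, confidence: float) -> bool:
        return confidence >= self.value


# A developer who dismisses three suggestions in a row gets a more restrained
# experience: a suggestion that cleared the initial bar no longer does.
threshold = AdaptiveThreshold()
for _ in range(3):
    threshold.update(Interaction.DISMISSED)
```

The clamp keeps a long streak of one kind of interaction from pushing the threshold to an extreme where suggestions are always or never shown.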

As always, we build this with you. If you have thoughts on NES, our team would love to hear from you! File an issue in our repository or submit feedback directly to VS Code.

Here’s what we’re building:

To experience the newest NES model, make sure you have the latest version of VS Code (and the Copilot Chat extension), then ensure NES is enabled in your VS Code settings.

Acknowledgements

A special thanks to Yuting Sun (CoreAI Post Training), Zeqi Lin (CoreAI Post Training), Alexandru Dima (VS Code), Brigit Murtaugh (VS Code), and Soojin Choi (GitHub Copilot) for contributing to this blog post. We would also like to express our gratitude to the developer community for their continued engagement and feedback as we improve NES. Also, a massive thanks to all the researchers, engineers, product managers, and designers across GitHub and Microsoft who contributed (and continue to contribute) to model training, client development, infrastructure, and testing.


Principal Applied Science Manager, Microsoft Code|AI. I lead an applied research team focused on improving developer productivity with AI through intelligent code models and code agents. Our team also optimizes the Copilot experience by taking innovations from research into product.
