
Beyond prompt crafting: How to be a better partner for your AI pair programmer

Ensuring quality code suggestions from Copilot goes beyond the perfect prompt. Context is key to success when working with your AI pair programmer.

When a developer first starts working with GitHub Copilot there’s (rightly) a focus on prompt crafting — or the art of providing good context and information to generate quality suggestions.

But context goes beyond typing out a couple of lines into Copilot Chat in VS Code. We want to ensure Copilot is considering the right files when performing operations, that these files are easy for Copilot to read, and that we provide Copilot any extra guidance it may need about the project or specific task.

So let’s explore taking the next step beyond prompt crafting, and think about how we can be a better partner for our AI pair programmer.

Context is key

I always like to talk about context by starting with a story. The other day my partner and I woke up and she said, “Let’s go to brunch!” Fantastic! Who doesn’t love brunch?

I recommended a spot, one of our favorites, and she said, “You know… we’ve been there quite a bit lately. I’d like to try something different.” I recommended another spot to which she replied, “Now that I’m thinking about it, I really want waffles. Let’s find somewhere that does good waffles.”

This conversation is, of course, pretty normal. My partner asked a question, I responded, she provided more context, and back and forth we went. All of my suggestions were perfectly reasonable based on the information I had, and when she didn’t hear what she was expecting she provided a bit more guidance. As we continued talking, she realized she had a craving for waffles, which she discovered as she considered my suggestions.

This is very much how we both talk with other people, but also how we approach working with generative AI tools, including Copilot. We ask questions, get answers, and work back and forth providing more context and making decisions based on what we see.

If we don’t receive the suggestions we’re expecting, or if something isn’t built to the specs we had in mind, it’s very likely Copilot didn’t have the context it needed — just as I didn’t have the context to suggest somewhere new when I started the conversation with my partner.

How GitHub Copilot works with code

To understand how Copilot in the IDE gets its context, it’s important to understand how it works. Except for agent mode, which performs external tasks, Copilot doesn’t build or run the code as it generates code suggestions. In fact, it behaves very similarly to, well, a pair programmer. It reads the code (and comments) of the files we’ve pointed it at just as another developer would.

But unlike a teammate, Copilot doesn’t have “institutional knowledge,” or the background information that comes with experience (although you can add custom instructions, but more on that later). This could be the history of why things were built a certain way (which isn’t documented anywhere but everyone just “knows” 🙄), an internal library or framework that should always be used, or patterns that need to be followed.

Obviously, all of this background info is important to get the right code suggestions from Copilot. If we’re using a data abstraction layer (DAL), for instance, but Copilot is generating raw SQL code, the suggestions aren’t going to be that helpful.

The problem isn’t that Copilot is generating invalid code. Instead, it’s lacking the context to generate the code in the format and structure we need. Basically, we want waffles and it’s giving us an omelette. Let’s see what we can do to get waffles.

Using code comments to improve Copilot’s suggestions through better context

There’s a common belief that quality code shouldn’t need comments, and that adding comments is a “code smell,” or an indication that something could be improved. While it’s noble to strive to write code that’s as readable as possible, it’s something we often fall short of in our day-to-day work.

Even when we do “hit the mark”, we need to remember that just because code might be readable to one developer, it doesn’t mean it’s readable to all developers. A couple of lines of comments can go a long way to ensuring readability.

The same holds true for Copilot! As we highlighted above, Copilot doesn’t run or compile your code except in specific situations. Instead, it “reads” your code much like a developer would.

Following the guidelines for having docstrings in functions/modules in Python, for example, can help ensure Copilot has a better understanding of what the code does and how it does it. This allows Copilot to generate higher-quality suggestions by using your existing code to ensure any new code follows the same patterns and practices already in place.
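As a minimal sketch of what this can look like, here's a hypothetical helper with a docstring. The function name, its parameters, and the funding rule are made up for illustration and don't come from a real project. The point is that the docstring spells out intent, arguments, return value, and error behavior for any reader, human or Copilot.

```python
def calculate_funding_progress(pledged_amount: float, goal_amount: float) -> float:
    """Return how much of a funding goal has been reached, as a percentage.

    Hypothetical example for illustration only.

    Args:
        pledged_amount: Total amount pledged so far. Must not be negative.
        goal_amount: Target amount for the campaign. Must be greater than zero.

    Returns:
        The percentage of the goal reached, capped at 100.0.

    Raises:
        ValueError: If pledged_amount is negative or goal_amount is not positive.
    """
    if pledged_amount < 0:
        raise ValueError("pledged_amount must not be negative")
    if goal_amount <= 0:
        raise ValueError("goal_amount must be greater than zero")
    # Cap the result so an overfunded campaign still reports 100 percent.
    return min(pledged_amount / goal_amount * 100.0, 100.0)
```

With a docstring like this in place, a new function written next to it has a clear pattern to follow for naming, type hints, and error handling.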

💡 Pro tip: When you open a file, it’s always a good idea to leave it in a better state than when you found it. One of the little improvements you could make is to add a few comments that help describe the code. You could always ask Copilot to generate the first draft of the comments, and you can add any additional details Copilot missed!

The benefit of using custom instructions with GitHub Copilot on your projects

To generate quality suggestions, Copilot benefits from having context around what you’re doing and how you’re doing it. Knowing the technology and frameworks you’re using, what coding standards to follow, and even some background on what you’re building helps Copilot raise the quality bar on its suggestions. This is where custom instructions come into play.

Custom instructions help you provide all of this background information and set ground rules (things like what APIs you want to call, naming patterns you want followed, or even stylistic preferences).

To get started, you place everything that’s important into a file named copilot-instructions.md inside your .github folder. It’s a markdown file, so you can create sections like Project structure, Technologies, Coding standards, and any other notes you want Copilot to consider on every single chat request. You can also add any guidance on tasks where you see Copilot not always choosing the right path, like maybe using class-based React components instead of function-based components (all the cool kids are using function-based components).

Keep in mind that custom instructions are sent to Copilot on every single chat request. You want to keep your instructions limited to information that’s relevant to the entire project. Providing too many details can make it a bit harder for Copilot to determine what’s important.

In simpler terms, you can basically think about the one friend you have who maybe shares too much detail when they’re telling a story, and how it can make it tricky to focus on the main plot points. It’s the same thing with Copilot. Provide some project-level guidance and overviews, so it best understands the environment in which it’s working.

A good rule of thumb is to have sections that highlight the various aspects of your project. An outline for a monorepo with a client and server for a web app might look like this:

# Tailspin Toys Crowd Funding

Website for crowd funding for games.

## Backend

The backend is written using:

- Flask for the API

- SQLAlchemy for the ORM

- SQLite for the database

## Frontend

The frontend is written using:

- Astro for routing

- Svelte for the components and interactivity

- Tailwind CSS for styling

## Code standards

- Use good variable names, avoiding abbreviations and single letter variables

- Use the casing standard for the language in question (camelCasing for TypeScript, snake_casing for Python, etc.)

- Use type hints in all languages which support them

## Project structure

- `client` contains the frontend code

- `docs` contains the documentation for the project

- `scripts` contains the scripts used to install services, start the app, and run tests

- `server` contains the backend code

This is relatively abbreviated, but notice the structure. We’re telling Copilot about the project we have, its structure, the techs in use, and some guidance about how we want our code to be created. We don’t have anything specific to tasks, like maybe writing unit tests, because we have another way to tell Copilot that information!

Providing specific instructions for specific tasks

Continuing our conversation about instruction files… (I’m Gen-X, so I’m required to use the Gen-X ellipsis at least once.)

VS Code and Codespaces also support .instructions.md files. These are just like the copilot-instructions.md file we spoke about previously, only they’re designed to be used for specific types of tasks and placed in .github/instructions.

Consider a project where you’re building out Flask Blueprints for the routes for an API. There might be requirements around how the file should be structured and how unit tests should be created. You can create a custom instructions file called flask-endpoint.instructions.md, place it in .github/instructions, then add it as context to the chat when you request Copilot to create a new endpoint. It might look something like:

# Endpoint creation guidelines

## Endpoint notes

- Endpoints are created in Flask using blueprints

- Create a centralized function for accessing data

- All endpoints require tests

- Use the `unittest` module for testing

- All tests must pass

- A script is provided to run tests at `scripts/run-server-tests.sh`

## Project notes

- The Python virtual environment is located in the root of the project in a **venv** folder

- Register all blueprints in `server/app.py`

- Use the [test instructions](./python-tests.instructions.md) when creating tests

## Prototype files

- [Endpoint prototype](../../server/routes/games.py)

- [Tests prototype](../../server/tests/test_games.py)

Notice how we’re providing specific information about how we want our endpoints created. You’ll also notice we’re even linking out to other files in the project using hyperlinks, both existing files for Copilot to use as representative examples, and other instructions files for more information.
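Because those links are relative paths, it's worth checking where they point. Here's a small sketch that resolves them, assuming they behave like ordinary relative Markdown links (that is, relative to the folder holding the instructions file, as the `../../` prefixes suggest). The helper name `resolve_instruction_links` is hypothetical and isn't part of any Copilot tooling.

```python
import posixpath
import re

# Matches Markdown inline links such as [label](target).
MARKDOWN_LINK = re.compile(r"\[[^\]]*\]\(([^)\s]+)\)")


def resolve_instruction_links(instructions_path: str, markdown_text: str) -> list[str]:
    """Return repo-relative paths for every relative link in an instructions file.

    Assumption: links resolve relative to the folder containing the
    instructions file, like ordinary relative Markdown links.
    """
    base_folder = posixpath.dirname(instructions_path)
    resolved = []
    for target in MARKDOWN_LINK.findall(markdown_text):
        if "://" in target:
            continue  # Skip absolute URLs; only repository files matter here.
        resolved.append(posixpath.normpath(posixpath.join(base_folder, target)))
    return resolved


example = """
- Use the [test instructions](./python-tests.instructions.md) when creating tests
- [Endpoint prototype](../../server/routes/games.py)
- [Tests prototype](../../server/tests/test_games.py)
"""

for path in resolve_instruction_links(
    ".github/instructions/flask-endpoint.instructions.md", example
):
    print(path)
```

For the example above, this prints `.github/instructions/python-tests.instructions.md`, `server/routes/games.py`, and `server/tests/test_games.py`, which matches the project structure described earlier.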

You can also apply instructions to file types based on a pattern. Let’s take the tests for example. If they were all located in server/tests, and started with test_, you could add metadata to the top of the instructions file to ensure Copilot always includes the instructions when working on a test file:

---

applyTo: server/tests/test_*.py

---

This gives you a lot of flexibility in ensuring Copilot is able to access the right information at the right time. This can be done explicitly by adding in the instructions file, or implicitly by providing a pattern for Copilot to use when building certain files.
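To get a feel for which files a pattern like `server/tests/test_*.py` would cover, here's a rough approximation in Python. This is not how VS Code evaluates `applyTo`; it's a simplified matcher (the name `pattern_applies` is made up) that assumes `*` stays within a single path segment and doesn't handle `**`.

```python
from fnmatch import fnmatchcase


def pattern_applies(pattern: str, file_path: str) -> bool:
    """Approximate whether a simple glob pattern covers a repo-relative path.

    Simplification: `*` matches within a single path segment only, and
    `**` is not supported. Real glob engines have more rules than this.
    """
    pattern_parts = pattern.split("/")
    path_parts = file_path.split("/")
    if len(pattern_parts) != len(path_parts):
        return False
    # Compare segment by segment so `*` never crosses a folder boundary.
    return all(
        fnmatchcase(path_part, pattern_part)
        for path_part, pattern_part in zip(path_parts, pattern_parts)
    )


pattern = "server/tests/test_*.py"
for candidate in [
    "server/tests/test_games.py",
    "server/tests/conftest.py",
    "server/routes/games.py",
]:
    print(candidate, pattern_applies(pattern, candidate))
```

Under these assumptions only `server/tests/test_games.py` matches, so the test instructions would be pulled in for test files but not for the route files themselves.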

Just as before, these are artifacts in your repository. It can take some time to build a collection of instruction files, but that investment will pay off in the form of higher-quality code and, in turn, improved productivity.

Fully reusable prompts

The VS Code team recently published a new, experimental feature called prompt files. Because they’re still in development, I don’t want to dig too deep into them, but you can read more about prompt files in the docs and see how to use them as they are currently implemented. In a nutshell, they allow you to effectively create scripted prompts for Copilot. You can choose the Copilot modes they’re available in (ask, edit, and agent), the tools to be called, and what questions to ask the developer. These can be created by the team for enhanced reuse and consistency.

Extending GitHub Copilot’s capabilities with Model Context Protocol (MCP)

In an ever-changing software development landscape, we need to ensure the information we’re working with is accurate, relevant, and up to date. This is what MCP, or Model Context Protocol, is built for! Developed initially by Anthropic, MCP is an open source protocol that lets organizations expose their services or data to generative AI tools.

When you add an MCP server to your IDE, you allow Copilot to “phone a friend” to find information, or even perform tasks on your behalf. For example, the Playwright MCP server helps create Playwright end-to-end tests, while the GitHub MCP server provides access to GitHub services like repositories, issues, and pull requests.

Let’s say, for instance, that you added the Playwright MCP server to your IDE. When you ask Copilot to create a new test to validate functionality on your website, Copilot can consult an authoritative source, allowing it to generate the best code it can.

You can even create your own MCP servers! One question I commonly hear is about how you can allow Copilot to look through an internal codebase or suite of libraries. With a custom MCP server, you could provide a facade for Copilot to perform these types of queries, then utilize the information discovered to suggest code based on your internal environment.
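As a rough sketch of the facade idea, the function below searches a folder of internal Python libraries for definitions matching a name. It uses only the standard library and is hypothetical throughout (the function name, the return shape, and the search strategy are all assumptions). A real custom MCP server would register a function like this as a tool through an MCP SDK, which isn't shown here.

```python
import re
from pathlib import Path


def find_definitions(library_root: str, symbol_name: str) -> list[dict]:
    """Find `def` or `class` lines for a symbol under library_root.

    Hypothetical lookup that an internal MCP server could expose as a tool.
    Returns one dict per match with the file, line number, and source line.
    """
    definition = re.compile(
        rf"^\s*(?:async\s+)?(?:def|class)\s+{re.escape(symbol_name)}\b"
    )
    matches = []
    # Sort the files so results come back in a stable order.
    for source_file in sorted(Path(library_root).rglob("*.py")):
        lines = source_file.read_text(encoding="utf-8").splitlines()
        for line_number, line in enumerate(lines, start=1):
            if definition.match(line):
                matches.append(
                    {
                        "file": source_file.relative_to(library_root).as_posix(),
                        "line": line_number,
                        "source": line.strip(),
                    }
                )
    return matches
```

Returning the file, line, and source text keeps the response small while still giving Copilot something concrete to read before it suggests code against an internal library.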

MCP is large enough to have its own blog post, which my colleague Cassidy wrote, sharing tips, tricks, and insights about MCP.

Thinking beyond prompts

Let me be clear: prompt crafting is important. It’s one of the first skills any developer should learn when they begin using GitHub Copilot.

But writing a good prompt is only one piece of what Copilot considers when generating an answer. By using the best practices highlighted above (comments and good code, custom instructions, and MCP servers), you can help Copilot understand what you want it to do and how you want it to do it. To bring it back to my analogy, you can ensure Copilot knows when you want waffles instead of omelettes.

And on that note, I’m off to brunch.

Tags:

Written by

Related posts

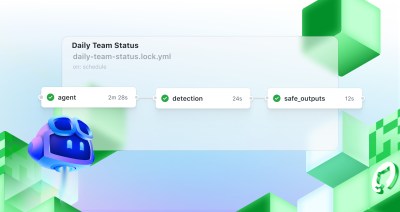

Automate repository tasks with GitHub Agentic Workflows

Discover GitHub Agentic Workflows, now in technical preview. Build automations using coding agents in GitHub Actions to handle triage, documentation, code quality, and more.

Continuous AI in practice: What developers can automate today with agentic CI

Think of Continuous AI as background agents that operate in your repository for tasks that require reasoning.

How to maximize GitHub Copilot’s agentic capabilities

A senior engineer’s guide to architecting and extending Copilot’s real-world applications.