A developer’s second brain: Reducing complexity through partnership with AI

As we look to empower developers with AI tools, we inadvertently integrate AI deeper into the way developers work. How do developers feel about that? And what are the most impactful ways to introduce more AI into workflows? We recently conducted 25 in-depth interviews with developers to understand exactly that.

As adoption of AI tools expands and the technology evolves, so do developers’ expectations and perspectives. Last year, our research showed that letting GitHub Copilot shoulder boring and repetitive work reduced cognitive load, freed up time, and brought delight to developers. A year later, we’ve seen the broad adoption of ChatGPT and an explosion of new and better models, and AI agents are now the talk of the industry. What is the next opportunity to provide value for developers through the use of AI? How do developers feel about working more closely with AI? And how do we integrate AI into workflows in a way that elevates developers’ work and identity?

The deeper integration of AI in developers’ workflows represents a major change to how they work. At GitHub Next we recently interviewed 25 developers to build a solid qualitative understanding of their perspective. We can’t measure what we don’t understand (or we can measure it wrong), so this qualitative deep dive is essential before we develop metrics and statistics. The clear signal we got about developers’ motivations and openness is already informing our plans, vision, and perspective, and today we are sharing it to inform yours, too. Let’s see what we found!

Finding 1: Cognitive burden is real, and developers experience it in two ways

The mentally taxing tasks developers talked about fell into two categories:

- “This is so tedious”: repetitive, boilerplate, and uninteresting tasks. Developers view these tasks as not worth their time, and therefore, ripe for automation.

- “This hurts my brain”: challenging yet interesting, fun, and engaging tasks. Developers see these as the core tasks of programming. They call for learning, problem solving and figuring things out, all of which help them grow as engineers.

AI is already making the tedious work less taxing. Tools like GitHub Copilot act as “a second pair of hands” for developers, speeding them through the uninteresting work. Developers report higher satisfaction from spending more of their energy on interesting work. Achievement unlocked!

But what about the cognitive burden incurred by tasks that are legitimately complex and interesting? This burden manifests as an overwhelming level of difficulty which can discourage a developer from attempting the task. One of our interviewees described the experience: _“Making you feel like you can’t think and [can’t] be as productive as you would be, and having mental blockers and distractions that prevent you from solving problems.”_ That’s not a happy state for developers.

Even with the advances of the last two years, AI has an opportunity to provide fresh value to developers. The paradigm for AI tools shifts from “a second pair of hands” to “a second brain,” augmenting developers’ thinking, lowering the mental tax of advanced tasks, and helping developers tackle complexity.

Where do developers stand on partnering with AI to tackle more complex tasks?

Finding 2: Developers are eager for AI assistance in complex tasks, but we have to get the boundaries right

The potential value of helping developers with complex tasks is high, but it’s tricky to get right. In contrast to tedious tasks, developers feel a strong attachment to complex or advanced programming tasks. They see themselves as ultimately responsible for solving complex problems. It is through working on these tasks that they learn, provide value, and gain an understanding of large systems, enabling them to maintain and expand those systems. This developer perspective is critical; it influences how open developers are to the involvement of AI in their workflows, and in what ways. And it sets a clear—though open-ended—goal for us to build a good “developer-AI partnership” and figure out how AI can augment developers during complex tasks, without compromising their understanding, learning, or identity.

Another observation in the interviews was that developers are not expecting perfection from AI today, an answer that perhaps would have been different 12 months ago. What’s more, developers see themselves as supervising and guiding the AI tools to produce the appropriate-for-them output. Today that process can still be frustrating and, at times, counterproductive, but developers view this process as paying dividends long-term as developers and AI tools adapt to each other and work in partnership.

Finding 3: Complex tasks have four parts

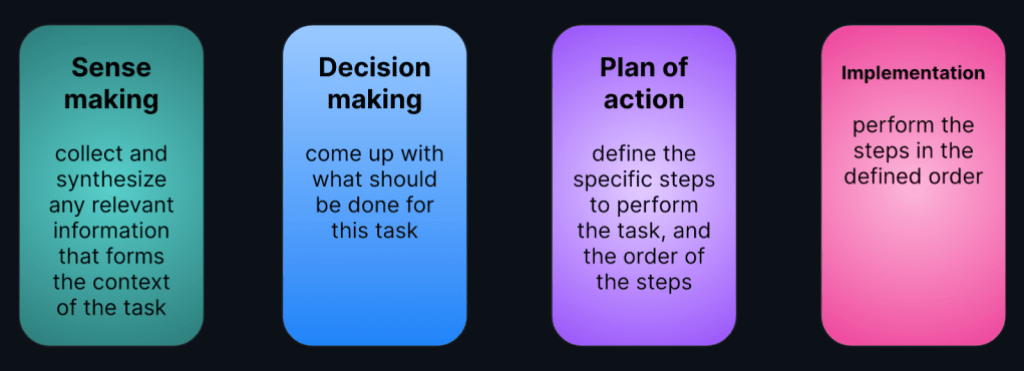

At this point, we have to introduce some nuances to help us think about what the developer-AI partnership and its boundaries might look like. We talk about tasks as whole units of work, but there is a lot that goes on, so let’s give things a bit of structure. We used the following framework that recognizes four parts to a task:

This framework (slightly adapted) comes from earlier research on automation allocation logic and the interface of humans and AI during various tasks. The framework’s history, and the fact that it resonated with all our interviewees, make us confident that it’s a helpful way to think about complex software development tasks. Developers may not always enjoy such a neatly linear process, but this is a useful mechanism to understand where AI assistance can have the most impact for developers. The question is where developers are facing challenges, and how open they are to input and help from AI.

Finding 4: Developers are open to AI assistance with sense making and with a plan of action

Developers want to get to context fast but need to find and ingest a lot of information, and often they are not sure where to begin. “The AI agent is way more efficient to do that,” one of the interviewees said, echoed by many others. At this stage, AI assistance can take the form of parsing a lot of information, synthesizing it, and surfacing highlights to focus the developer’s attention. While developers were eager to get AI assistance with the sense making process, they pointed out that they still want to have oversight. They want to see what sources the AI tool is using, and be able to input additional sources that are situationally relevant or unknown to the AI. An interviewee put it like this: “There’s context in what humans know that without it AI tools wouldn’t suggest something valuable.”

Developers also find it overwhelming to determine the specific steps to solve a problem or perform a task. This activity is inherently open-ended: developers suffer from cognitive load as they evaluate different courses of action and attempt to reason about tradeoffs, implications, and the relative importance of tighter scope (for example, solving this problem now) versus broader scope (for example, investing more effort now to produce a more durable solution). Developers are looking for AI input here to get them past the intimidation of the blank canvas. Can AI propose a plan, or more than one, and argue the pros and cons of each? Developers want to skip over the initial brainstorming and start with some strawman options to evaluate, or use as prompts for further brainstorming. As with the process of sense making, developers still want to exercise oversight over the AI, and be able to edit or cherry-pick steps in the plan.

Finding 5: Developers are cautious about AI autonomy in decision making or implementation

While there are areas where developers welcome AI input, it is equally important to understand where they are skeptical about it, and why.

Perhaps unsurprisingly, developers want to retain control of all decision making while they work on complex tasks and large changes. We mentioned earlier how developers’ identity is tied to complex programming tasks and problems, and that they see themselves as ultimately responsible and accountable for them. As such, while AI tools can be helpful by simplifying context and providing alternatives, developers want to retain executive oversight of all decisions.

Developers were also hesitant to let AI tools handle implementation autonomously. There were two concerns at the root of developers’ reluctance:

- Today’s AI is perceived as insufficiently reliable to handle implementation autonomously. That’s a fair point; we have seen many examples of models providing inaccurate results to even trivial questions and tasks. It may also be a reflection of the technical limitations today. As models and capabilities improve, developers’ perceptions may shift.

- AI is perceived as a threat to the value of developers. There was concern that autonomous implementation removes the value developers contribute today, in addition to compromising their understanding of code and learning opportunities. This suggests a design goal for AI tools: aiding developers to acquire and refresh mental models quickly, and enabling them to pivot in and out of implementation details. These tools must aid learning, even as they implement changes on behalf of the developer.

What do the findings mean for developers?

The first wave of AI tools provides a second pair of hands for developers, bringing them the delight of doing less boilerplate work while saving them time. As we look forward, saving developers mental energy, an equally finite and critical resource, is the next frontier. We must help developers tackle complexity by also arming them with a second brain. Unlocking developer happiness seems to be correlated with experiencing lower cognitive burden. AI tools and agents lower the barriers to creation and experimentation in software development through the use of natural language as well as techniques that conserve developers’ attention for the tasks which remain the province of humans.

We anticipate that partnership with AI will naturally result in developers shifting up a level of abstraction in how they think and work. Developers will likely become “systems thinkers,” focusing on specifying the behavior of systems and applications that solve problems and address opportunities, steering and supervising what AI tools produce, and intervening when they have to. Systems thinking has always been a virtuous quality of software developers, but it is frequently viewed as the responsibility of experienced developers. As the mechanical work of development is transferred from developers to AI tooling, systems thinking will become a skill that developers can exercise earlier in their careers, accelerating their growth. Such a path will not only enable more developers to tackle increasing complexity, but will also create clear boundaries between their value/identity and the role that AI tools play in their workflow.

We recently discussed these implications for developers in a panel at GitHub Universe 2023. Check out the recording for a more thorough view!

How are we using these findings?

Based on the findings from our interviews, we realize that a successful developer-AI partnership is one that plays to the strengths of each partner. AI tools and models today have efficiency advantages in parsing, summarizing, and synthesizing a lot of information quickly. Additionally, we can leverage AI agents to recommend and critique plans of action for complex tasks. Combined, these two AI affordances can provide developers with an AI-native workflow that lowers the high mental tax at the start of tasks, and helps tackle the complexity of making larger changes to a codebase. On the other side of the partnership, developers remain the best judges of whether a proposed course of action is the best one. Developers also have situational and contextual knowledge that makes their decisions and implementation direction unique, and the ideal reference point for AI assistance.

At the same time, we realize from the interviews how critical steerability and transparency are for developers when it comes to working with AI tools. When developers envision deeper, more meaningful integration of AI into their workflows, they envision AI tools that help them to think, but do not think for them. They envision AI tools that are involved in the act of sense making and crafting plans of action, but do not perform actions without oversight, consent, review, or approval. It is this transparency and steerability that will keep developers in the loop and in control even as AI tools become capable of more autonomous action.

Finally, there is a lot of room for AI tools to earn developers’ trust in their output. This trust is not established today, and will take some time to build, provided that AI tools demonstrate reliable behavior. As one of our interviewees described it: “The AI shouldn’t have full autonomy to do whatever it sees best. Once the AI has a better understanding, you can give more control to the AI agent.” In the meantime, it is critical that developers can easily validate any AI-suggested changes—“The AI agent needs to sell you on the approach. It would be nice if you could have a virtual run through of the execution of the plan,” our interviewee continued.

These design principles—derived from the developer interviews—are informing how we are building Copilot Workspace at GitHub Next. Copilot Workspace is our vision of a developer partnering with AI from a task description all the way to the implementation that becomes a pull request. Context is derived from everything contained in the task description, supporting developers’ sense making, and the AI agent in Copilot Workspace proposes a plan of action. To ensure steerability and transparency, developers can edit the plan and, once they choose to implement it, they can inspect and edit all the Copilot-suggested changes. Copilot Workspace also supports validating the changes by building and testing them. The workflow ends—as it typically would—with the developer creating a pull request to share their changes with the rest of their team for review.

This is just the beginning of our vision. Empowering developers with AI manifests differently over time, as tools get normalized, AI capabilities expand, and developers’ behavior adapts. The next wave of value will come from evolving AI tools to be a second brain, through natural language, AI agents, visual programming, and other advancements. As we bring new workflows to developers, we remain vigilant about not overstepping. Software creation will change sooner than we think, and our goal is to reinforce developers’ ownership, understanding, and learning of code and systems in new ways as well. As we make consequential technical leaps forward we also remain user-centric—listening to and understanding developers’ sentiment and needs, informing our own perspective as we go.

Who did we interview?

In this round of interviews, we recruited 25 US-based participants working full-time as software engineers. Eighteen of the interviewees (72%) were favorable towards AI tools, while seven interviewees (28%) self-identified as AI skeptics. Participants worked in organizations of various sizes (64% in Large or Extra-Large Enterprises, 32% in Small or Medium Enterprises, and 4% in a startup). Finally, we recruited participants across the spectrum of years of professional experience (32% had 0-5 years of experience, 44% had 6-10 years, 16% had 11-15 years, and 8% had over 16 years).

We are grateful to all the developers who participated in the interviews—your input is invaluable as we continue to invest in the AI-powered developer experience of tomorrow.
