GitHub Actions self-hosted runners on Google Cloud

Learn about patterns for configuring and maintaining GitHub Actions self-hosted runners on Google Cloud.

This post is co-authored with Bharath Baiju and Seth Vargo from Google Cloud.

Introduction

GitHub Actions helps you automate your software development workflows. You’re probably already familiar with the built-in runners for Windows, Linux, and macOS, but what if your workloads require custom hardware, a specific operating system, or software tools that aren’t available on these runners?

Self-hosted runners give you freedom, flexibility, and complete control over your GitHub Actions execution environment. These runners can be physical servers, virtual machines, or container images, and they can run on-premises, on a public cloud like Google Cloud, or on both using a platform like Anthos. Here are some use cases for GitHub Actions self-hosted runners:

- Run with more memory and CPU resources for faster build and test times.

- Leverage access to custom hardware like GPUs and TPUs.

- Choose different processor architectures like ARM.

- Interact with the physical world using ROS and serial-attached robots.

- Access information only available within your organization’s security perimeter like credentials or certificates.

- Integrate with “behind the firewall” resources and other non-external systems.

This post explores patterns for configuring and maintaining GitHub Actions self-hosted runners on Google Cloud.

⚠️ Note that these use cases are considered experimental and not officially supported by GitHub at this time. Additionally, it’s recommended not to use self-hosted runners on public repositories for security reasons: anyone who can open a pull request from a fork could run untrusted code on your runner.

Scalable runners with App Engine

The first pattern leverages the ephemeral and scalable nature of containers to create on-demand, self-hosted GitHub Actions runners using App Engine. In this model, you build and run a custom container image, and App Engine keeps the runner running. Furthermore, you can configure App Engine to automatically scale the number of runners, which could be useful in rapid iteration environments.

To get started, first create a Dockerfile that installs the GitHub Actions runner into a Docker container:

    FROM ubuntu:18.04

    # Default runner version; the download step below overrides it with the latest release
    ENV RUNNER_VERSION=2.263.0

    RUN useradd -m actions

    RUN apt-get -yqq update && apt-get install -yqq curl jq wget

    # Look up the latest release tag (for example v2.263.0), strip the leading "v",
    # then download and unpack the matching runner archive
    RUN LATEST_LABEL="$(curl -s -X GET 'https://api.github.com/repos/actions/runner/releases/latest' | jq -r '.tag_name')" \
      && RUNNER_VERSION="${LATEST_LABEL#v}" \
      && cd /home/actions && mkdir actions-runner && cd actions-runner \
      && wget https://github.com/actions/runner/releases/download/v${RUNNER_VERSION}/actions-runner-linux-x64-${RUNNER_VERSION}.tar.gz \
      && tar xzf ./actions-runner-linux-x64-${RUNNER_VERSION}.tar.gz

    WORKDIR /home/actions/actions-runner

    RUN chown -R actions ~actions && /home/actions/actions-runner/bin/installdependencies.sh

    USER actions

    COPY entrypoint.sh .

    ENTRYPOINT ["./entrypoint.sh"]

Next, define a small script that runs when the container boots to register the custom runner against your repository or organization:

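    #!/usr/bin/env bash

    set -eEuo pipefail

    # Exchange the access token passed in TOKEN for a runner registration token
    TOKEN=$(curl -s -X POST -H "authorization: token ${TOKEN}" "https://api.github.com/repos/${OWNER}/${REPO}/actions/runners/registration-token" | jq -r .token)

    # Deregister the runner from GitHub
    cleanup() {
      ./config.sh remove --token "${TOKEN}"
    }

    ./config.sh \
      --url "https://github.com/${OWNER}/${REPO}" \
      --token "${TOKEN}" \
      --name "${NAME}" \
      --unattended \
      --work _work

    # Deregister on any exit; with set -e, a failing runner would otherwise skip cleanup
    trap cleanup EXIT

    ./runsvc.sh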
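The entrypoint above registers against a single repository and, because of `set -u`, stops with a terse "unbound variable" message when a setting is missing. A minimal sketch of two helpers that could make it fail with a clearer message and choose between the repository and organization endpoints. `require_env` and `registration_token_url` are hypothetical names, and treating a missing repository argument as "register at the organization level" is an assumption of this sketch, not something the original script does.

```bash
#!/usr/bin/env bash

# Hypothetical helper: report every unset or empty variable by name, then fail.
require_env() {
  local missing=0 name
  for name in "$@"; do
    if [[ -z "${!name:-}" ]]; then
      echo "missing required environment variable: ${name}" >&2
      missing=1
    fi
  done
  return "${missing}"
}

# Hypothetical helper: print the registration-token endpoint.
# With a repo argument it targets the repository; without one, the organization.
registration_token_url() {
  local owner="$1" repo="${2:-}"
  if [[ -n "${repo}" ]]; then
    echo "https://api.github.com/repos/${owner}/${repo}/actions/runners/registration-token"
  else
    echo "https://api.github.com/orgs/${owner}/actions/runners/registration-token"
  fi
}
```

In the entrypoint, `require_env TOKEN OWNER NAME` could run before the `curl` call, which would then post to `$(registration_token_url "${OWNER}" "${REPO:-}")`. For an organization-level runner, the `--url` passed to `config.sh` would be `https://github.com/${OWNER}`.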

Then create an app.yaml file to deploy the service:

    ---
    service: github-actions
    runtime: custom
    env: flex

    resources:
      cpu: 2
      memory_gb: 4

    automatic_scaling:
      min_num_instances: 1
      max_num_instances: 10
      cpu_utilization:
        target_utilization: 0.8

This configuration uses elastic autoscaling based on CPU usage, and you can connect to other resources with serverless VPC access. Unfortunately, App Engine cannot currently consume custom hardware like GPUs and TPUs, and it is not yet available on-premises. Additionally, because App Engine already runs the runner as a container, it is not currently possible to use Docker-based actions (or actions that rely on the Docker daemon) with this setup.
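With the Dockerfile, entrypoint.sh, and app.yaml in one directory, deployment comes down to a single `gcloud app deploy` call. A minimal sketch of a wrapper around it: `run`, `deploy_runner`, and the `DRY_RUN` switch are illustrative names, and the project ID is a placeholder you would supply.

```bash
#!/usr/bin/env bash

# Print the command instead of running it when DRY_RUN=1 (illustrative switch).
run() {
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical wrapper: deploy app.yaml (and the Dockerfile next to it)
# to the App Engine flexible environment of the given project.
deploy_runner() {
  local project="$1"
  run gcloud app deploy app.yaml --project "${project}" --quiet
}
```

Running `DRY_RUN=1 deploy_runner my-project` prints the command without touching the project, which is a cheap way to check the arguments before a real deploy.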

Persistent runners on GCE

Using Compute Engine, you can create self-hosted runners that suit your specific workflows, ranging from custom machine sizes to GPU-accelerated VMs. Managed Instance Groups allow you to control these machines from a single pane of glass and offer additional features such as autoscaling based on usage. You can also take advantage of Application Default Credentials to automatically authenticate with Google Cloud APIs using the service account attached to the GCE VM without having to manage service account keys.

Example Managed Instance Groups runner: https://github.com/bharathkkb/gh-runners/tree/master/gce

Persistent runners on Kubernetes Engine

Since Google Kubernetes Engine (GKE) is a true Kubernetes installation, you can use any of the existing community patterns for deploying custom GitHub Actions runners on Kubernetes with GKE.

Additionally, you can use GKE-specific features like Workload Identity to authenticate with Google Cloud APIs using a Kubernetes Service Account bound to a Google Service Account. This removes the overhead of creating and managing a service account key. Unlike traditional service account keys, which are valid for 10 years, Workload Identity credentials are only valid for a short time, which reduces the operational burden of rotating these credentials.
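The binding between the two accounts comes down to one IAM policy binding and one annotation on the Kubernetes Service Account. A minimal sketch, assuming Workload Identity is already enabled on the cluster; the function names and every argument (project ID, namespace, account names) are placeholders for illustration, not values taken from the linked example.

```bash
#!/usr/bin/env bash

# Print the IAM member string that identifies a Kubernetes service account
# under Workload Identity: serviceAccount:PROJECT.svc.id.goog[NAMESPACE/KSA]
workload_identity_member() {
  local project="$1" namespace="$2" ksa="$3"
  echo "serviceAccount:${project}.svc.id.goog[${namespace}/${ksa}]"
}

# Hypothetical helper: allow the Kubernetes service account to act as the
# Google service account, then annotate it so GKE knows which one to use.
bind_workload_identity() {
  local project="$1" namespace="$2" ksa="$3" gsa_email="$4"

  gcloud iam service-accounts add-iam-policy-binding "${gsa_email}" \
    --project "${project}" \
    --role roles/iam.workloadIdentityUser \
    --member "$(workload_identity_member "${project}" "${namespace}" "${ksa}")"

  kubectl annotate serviceaccount "${ksa}" \
    --namespace "${namespace}" \
    "iam.gke.io/gcp-service-account=${gsa_email}"
}
```

Runner pods that use the annotated Kubernetes Service Account can then call Google Cloud APIs without a key file mounted into the pod.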

Example GKE runner with Workload Identity: https://github.com/bharathkkb/gh-runners/tree/master/gke

Sample workflow: https://github.com/bharathkkb/gh-runners/tree/master/example-workflow

This solution is great if you’re already using a Kubernetes cluster in the cloud, but what if you need to access on-premises resources?

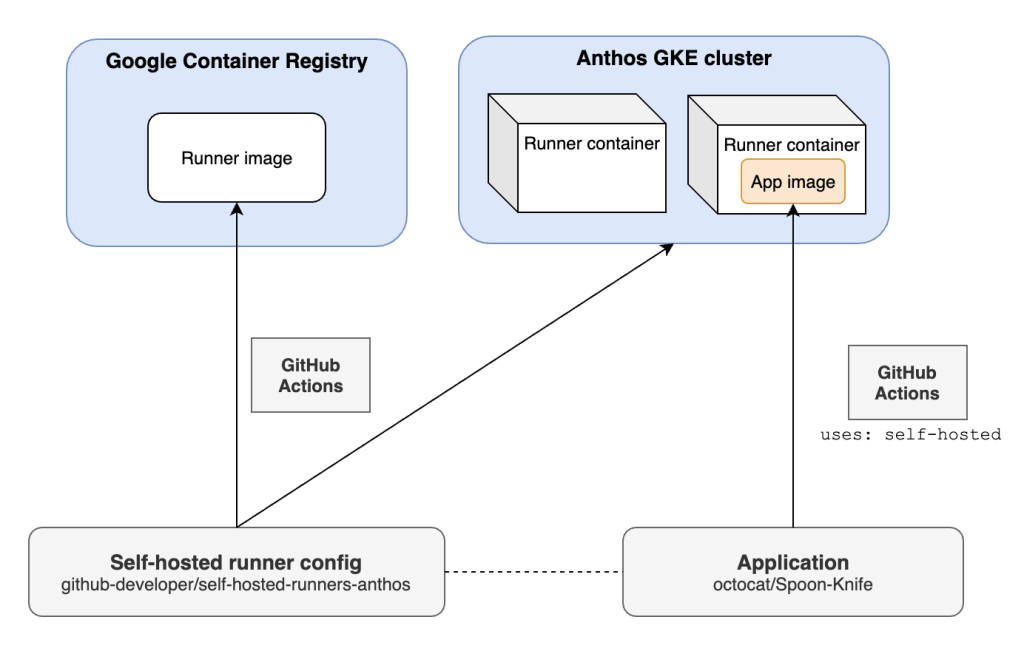

Hybrid runners with Anthos

Anthos lets you build, deploy, and manage applications anywhere in a secure, consistent manner. You can think of Anthos as a subset of the Google Cloud software stack that runs on infrastructure that you control, including on-premises. This final pattern shows how GitHub Actions and Anthos GKE can compose a containerized build pipeline that runs on your infrastructure. You can review the full code on GitHub.

The repository linked above includes the set of commands to run to provision an Anthos GKE cluster. It creates the Google Cloud project, enables all required services, and provisions a GKE cluster managed by Anthos. It also explains how to configure Kubernetes secrets that will provide the runner pod a TOKEN to (de)register itself and a GITHUB_REPO variable for which the runner(s) will be made available.

The Dockerfile is based on a general-purpose Ubuntu image, and downloads and installs dependencies including the runner itself. In the workflow, a test job runs a test build on each pull request, and a deploy job pushes the image to Google Container Registry and uses kustomize to apply the manifest to our Anthos GKE cluster, pulling in the image we just pushed to Container Registry.

Because we’ve used a Docker-in-Docker sidecar pod in this project, we can even use these self-hosted runners to run container builds. Though this approach offers build flexibility, it requires a privileged security context and therefore extends the trust boundary to the whole cluster. We recommend extra caution with this approach or removing the sidecar if your application doesn’t require containers.

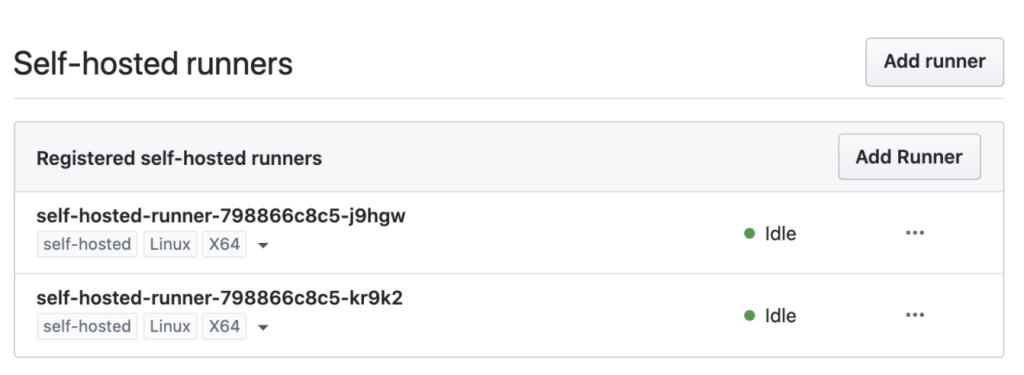

After the test and deploy jobs have completed, we can navigate to GITHUB_REPO’s settings page and see the newly provisioned runners:

It is currently not advised to scale down replicas while builds are running, since it’s not yet feasible to select only the idle runners. Consider scaling down runners during off-peak hours, say late at night, and scaling them back up in the morning before builds are needed.
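An off-peak schedule like this could be driven by a small script run from cron or another scheduler. A minimal sketch: the 07:00 to 19:59 peak window, the replica counts, and the `github-runner` deployment name are all assumptions for illustration.

```bash
#!/usr/bin/env bash

# Print the replica count for a given hour (0-23).
# Assumed schedule: 5 runners from 07:00 to 19:59, 1 runner otherwise.
desired_replicas() {
  local hour=$((10#$1))   # force base 10 so "08" and "09" are not read as octal
  if (( hour >= 7 && hour < 20 )); then
    echo 5
  else
    echo 1
  fi
}

# Hypothetical helper: scale the runner deployment for the current hour.
scale_runners() {
  local deployment="${1:-github-runner}"
  kubectl scale deployment "${deployment}" \
    --replicas="$(desired_replicas "$(date +%H)")"
}
```

This does not pick idle runners. It only moves the scale-down to hours when builds are unlikely, so a build that happens to be running at the boundary can still be interrupted.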

Summary

We have shown several benefits of using GitHub Actions self-hosted runners and examples of how you can get started with them today on a cloud provider like Google Cloud. Many thanks to our partners at Google Cloud (Bharath Baiju, Craig Barber, and Seth Vargo) for the collaboration opportunity. We look forward to seeing the amazing things the community builds!

Learn more about self-hosted runners
