Automating MySQL schema migrations with GitHub Actions and more

In this deep dive, we cover how our daily schema migrations amounted to a significant toil on the database infrastructure team, and how we searched for a solution to automate the manual parts of the process.

In the past year, GitHub engineers shipped GitHub Packages, Actions, Sponsors, Mobile, security advisories and updates, notifications, code navigation, and more. Needless to say, the development pace at GitHub is accelerated.

With MySQL serving our backends, updating code requires changes to the underlying database schema. New features may require new tables, columns, changes to existing columns or indexes, dropping unused tables, and so on. On average, we have two schema migrations running daily on our production servers. Some days we have a half dozen migrations to run. We’ll cover how this amounted to a significant toil on the database infrastructure team, and how we searched for a solution to automate the manual parts of the process.

What’s in a migration?

At first glance, migrating appears to be no more difficult than adding a CREATE, ALTER or DROP TABLE statement. At a closer look, the process is far more complex, and involves multiple owners, platforms, environments, and transitions between those pieces. Here’s the flow as we experience it at GitHub:

1. Starting the process

It begins with a developer who identifies the need for a schema change. Maybe they need a new table, or a new column in an existing table. The developer has a local testing environment where they can experiment however they like, until they’re satisfied and wish to apply changes to production.

2. Feedback and review

The developer doesn’t just apply their changes online. First, they seek review and discussion with their peers. Depending on the change, they may ask for a review from a group of schema reviewers (at GitHub, this is a volunteer group experienced with database design). Then, they seek the agreement of the database infrastructure team, who owns the production databases. The database infrastructure team reviews the changes, looking for performance concerns, among other potential issues. Assuming all reviews are favorable, it’s on the database infrastructure engineer to deploy the change to production.

3. Taking the change to production

At this point, we need to determine where the change is taking place since we have multiple clusters. Some of them are sharded, so we have to ask: Where do the affected tables exist in our clusters or schemas? Next, we need to know what to run. The developer presented the schema they want to see in production, but how do we transition the existing production schema into the one requested? What’s the formal CREATE, ALTER or DROP statement? Following what to run, we need to know how we should run the migration. Do we run the query directly? Or is it a blocking operation and we need an online schema change tool? And finally, we need to know when to execute the migration. Perhaps now is not a good time if there’s already a migration running on the cluster.

4. Migration

At long last, we’re ready to run the migration. Some of our larger tables may take hours and even days to migrate, especially since the site needs to be up and running. We want to track status. And we want to see what impact the migration may have on production, or, preferably, to ensure it does not have an impact.

5. Completing the process

Even as the migration completes there are further steps to take. There’s some cleanup process, and we want to unblock the next migration, if any currently exists. The database infrastructure team wishes to advertise to the developer that the changes have taken place, and the developer will have their own followup to address.

Throughout that flow, there’s a lot of potential for friction:

- Does the database infrastructure team review the developer’s request in a timely fashion?

- Is the review process productive?

- Do we need to wait for something before running the migration?

- Is the database infrastructure engineer actually available to run the migration, or perhaps they’re busy with other tasks?

The database infrastructure engineer needs to either create or review the migration statement, double-check their logic, ensure they can begin the migration, follow up, unblock other migrations as needed, advertise progress to the developer, and so on.

With our volume of daily migrations, this flow sometimes consumed hours of work of a database infrastructure engineer per day, and—in the best-case scenario—at least several hours of work per week. They would frequently multitask between two or three migrations and keep mental notes for next steps. Developers would ping us to ask what the status was, and their work was sometimes blocked until the migration was complete.

A brief history of schema migration automation at GitHub

GitHub was originally created as a Ruby on Rails (RoR) app. Like other frameworks, and in particular, those using Active Record, RoR has a built-in mechanism to generate database schema from code, as well as programmatically express migrations. RoR tooling can analyze code changes and create and run the SQL statements to change the database schema.

We use the GitHub flow to manage our own development: when suggesting a change, we create a branch, commit, push, and open a pull request. We use the declarative approach to schema definition: our RoR GitHub repository contains the full schema definition, such as the CREATE TABLE statements that generate the complete schema. This way, we know exactly what schema is associated with each commit or branch. Contrast that with the programmatic approach, where your commits contain migration statements, and where to deduce a schema you need to start at some baseline and run through all statements sequentially.

The database infrastructure and the application teams collaborated to create a set of chatops tooling. We ran a chatops command to list pull requests with schema changes, and then another command to generate the CREATE/ALTER/DROP statement for a given pull request. For this, we used RoR’s rake command. Our wrapper scripts then added meta information, like which cluster is involved, and generated a script used to run the migration.

The generated statements and script were mostly fine, with occasional SQL syntax errors. We’d review the output and fix it manually as needed.

A few years ago we developed gh-ost, an online table migration solution, which added even more visibility and control through our chatops. We’d check progress, change runtime configuration, and cut over the migration through chat. While simple, these were still manual steps.

The heart of GitHub’s app remains with the same RoR, but we’ve expanded far beyond it. We created more repositories and some also use RoR, while others are in other programming languages such as Go. However, we didn’t use Object Relational Mapping practice with the new repositories.

As GitHub expanded, so did the toil on the database infrastructure team. We’d review pull requests, compare schemas, generate migration statements manually, and verify on a local machine. Other than the git log, no formal tracking for schema migrations existed. We’d check in chat, issues, and pull requests to see what was done and what wasn’t. We’d keep track of ongoing migrations in our heads, context switch between the migrations throughout the day, and get interrupted by notifications. And we did this while taking each migration through the next step, keeping mental notes, and communicating the progress to our peers.

With these steps in mind, we wanted a solution to automate the process. We came up with various ideas, and in 2019 GitHub Actions was released. This was our solution: multiple loosely coupled components, each owning a specific aspect of the flow, all orchestrated by a controller service. The next section covers the breakdown of our solution.

Code

Our basic premise is that schema design should be treated as code. We want the schema to be versioned, and we want to know what schema is associated with what version of our code.

To illustrate, GitHub provides not only github.com, but also GitHub Enterprise, an on-premise solution. On github.com we run continuous deployments. With GitHub Enterprise, we make periodic releases, and our customers can upgrade in-house. This means we need to be able to reproduce any schema changes we make to github.com on a customer’s Enterprise server.

Therefore we must keep our schema design coupled with the code in the same git repository. For a developer to design a schema change, they need to follow our normal development flow: create a branch, commit, push, and open a pull request. The pull request is where code is reviewed and discussion takes place for any changes. It’s where continuous integration and testing run. Our solution revolves around the pull request, and this is standardized across all our repositories.

The change

Once a pull request is opened, we need to be able to identify what changes we’d like to make. Typically, when we review code changes, we look at the diff. And it might be tempting to expect that git diff can help us formalize the schema change. Unfortunately, this is not the case, and git diff is poor at identifying these changes. For example, consider this simplified table definition:

CREATE TABLE some_table (

id int(10) unsigned NOT NULL AUTO_INCREMENT,

hostname varchar(128) NOT NULL,

PRIMARY KEY (id),

KEY (hostname)

);

Suppose we decide to add a new column and drop the index on hostname. The new schema becomes:

CREATE TABLE some_table (

id int(10) unsigned NOT NULL AUTO_INCREMENT,

hostname varchar(128) NOT NULL,

time_created TIMESTAMP NOT NULL,

PRIMARY KEY (id)

);

Running git diff on the two schemas yields the following:

@@ -1,6 +1,6 @@

CREATE TABLE some_table (

id int(10) unsigned NOT NULL AUTO_INCREMENT,

hostname varchar(128) NOT NULL,

- PRIMARY KEY (id),

- KEY (hostname)

+ time_created TIMESTAMP NOT NULL,

+ PRIMARY KEY (id)

);

The pull request’s “Files changed” tab shows the same:

See how the PRIMARY KEY line goes into the diff because of the trailing comma. This diff does not capture the schema change well, and while RoR provides tooling for that, we’ve still had to carefully review its output. Fortunately, there’s a good MySQL-oriented tool to do the task.
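The trailing-comma effect is easy to reproduce outside of git. The following is a minimal sketch that uses Python’s standard difflib module as a stand-in for git diff (an assumption made for illustration; git uses its own diff implementation, but both compare text line by line). The helper name changed_lines is hypothetical.

```python
import difflib
from typing import List

OLD_SCHEMA = """\
CREATE TABLE some_table (
  id int(10) unsigned NOT NULL AUTO_INCREMENT,
  hostname varchar(128) NOT NULL,
  PRIMARY KEY (id),
  KEY (hostname)
);
"""

NEW_SCHEMA = """\
CREATE TABLE some_table (
  id int(10) unsigned NOT NULL AUTO_INCREMENT,
  hostname varchar(128) NOT NULL,
  time_created TIMESTAMP NOT NULL,
  PRIMARY KEY (id)
);
"""


def changed_lines(old: str, new: str) -> List[str]:
    """Return only the added/removed lines of a unified diff (no headers)."""
    diff = difflib.unified_diff(old.splitlines(), new.splitlines(), lineterm="")
    return [
        line
        for line in diff
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    ]


if __name__ == "__main__":
    for line in changed_lines(OLD_SCHEMA, NEW_SCHEMA):
        print(line)
```

The PRIMARY KEY line is reported as both removed and added, even though the primary key itself did not change. A line-based diff sees text, not schema objects.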

skeema

skeema is an open source schema management utility developed by Evan Elias. It expects the declarative approach, and looks for a schema definition on your file system (hopefully as part of your repository). The file system layout should include a directory per schema/database, a file per table, and then some special configuration files telling skeema the identities of, and the credentials for, MySQL servers in various environments. Skeema is able to run useful tasks, such as:

- skeema diff: generate SQL statements that convert the existing database schema into the schema defined in the file system. This includes as many CREATE, ALTER and DROP TABLE statements as needed.

- skeema push: actually apply changes to the database server for the schema to match the one on file system

- skeema pull: rewrite the filesystem schema based on the existing schema in the MySQL server.

skeema can do much more, including the ability to invoke online schema change tools—but that’s outside this post’s scope.

Git users will feel comfortable with skeema. Indeed, skeema works very well with git-versioned schemas. For us, the most valuable asset is its diff output: a well formed, reliable set of statements to show the SQL transition from one schema to another. For example, skeema diff output for the above schema change is:

USE `test`;

ALTER TABLE `some_table` ADD COLUMN `time_created` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP, DROP KEY `hostname`;

Note that the above is not only correct, but also formal. It reproduces correctly whether our code uses lower/upper case, includes/omits default value, etc.

We wanted to use skeema to tell us what statements we needed to run to get from our existing state into the state defined in the pull request. Assuming the master branch reflects our current production schema, this now becomes a matter of diffing the schemas between master and the pull request’s branch.

Skeema wasn’t without its challenges, and we had to figure out where to place skeema from a design perspective. Do the developers own it? Does every repository own it? Is there a central service to own it? Each presented its own problems, from false ownership to excessive responsibilities and access.

GitHub Actions

Enter GitHub Actions. With Actions, you’re able to run code as a response to events taking place in your repository. A new pull request, review, comment, issue, and quite a few others, are such events. The code (the action) is arbitrary, and GitHub spawns a container on its own infrastructure, where your code will run. What makes this extra interesting is that the container can get access to your repository. GitHub Actions implicitly receives an API token to interact with the repository.

The container comes with popular software packages pre-installed, such as a MySQL server.

Perhaps the most classic use of Actions is CI/CD. When a pull_request event occurs (a new pull request and any subsequent commit) run some code to build, test, lint, or validate the change. We took this approach to run skeema as part of a pull_request action flow, called skeema-diff.

Here’s a simplified breakdown of the action:

- Fetch skeema binary

- Checkout master branch

- Run skeema push to populate the container’s MySQL server with the schema as defined by the master branch

- Checkout pull request’s branch

- Run skeema diff to generate the statements that take the schema from the one in MySQL (remember, this is the master schema) to the one in the pull request’s branch

- Add the diff as a comment in the pull request

- Add a special label to indicate this pull request has a schema change

The code is more complex than what we’ve shown. We actually use base and head instead of master and branch, and there’s some logic to formalize, edit and validate the diff, to handle commits that further change the schema, among other processes.
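The core of those steps can be sketched as follows. This is not the skeema-diff Action’s actual code, only an illustration under stated assumptions: git and skeema are on the PATH, the working directory holds skeema’s configuration pointing at the container’s MySQL server, and the function names (shell_runner, compute_schema_diff) are hypothetical. Commenting on and labeling the pull request are left out.

```python
import subprocess
from typing import Callable, Sequence

# A runner takes a command (list of arguments) and returns its stdout.
Runner = Callable[[Sequence[str]], str]


def shell_runner(cmd: Sequence[str]) -> str:
    """Run a command and return its stdout as text.

    check=False is deliberate: a diff tool may use a non-zero exit status
    to signal "differences found" rather than failure, so the caller is
    expected to inspect the output.
    """
    completed = subprocess.run(list(cmd), capture_output=True, text=True, check=False)
    return completed.stdout


def compute_schema_diff(base_ref: str, head_ref: str, run: Runner = shell_runner) -> str:
    """Return the statements that take the base schema to the head schema."""
    run(["git", "checkout", base_ref])  # schema files as of the base
    run(["skeema", "push"])             # load the base schema into MySQL
    run(["git", "checkout", head_ref])  # schema files as of the pull request
    return run(["skeema", "diff"])      # MySQL (base) vs. file system (head)
```

Passing the runner as a parameter keeps the sequence testable without a MySQL server: a test can substitute a function that records the commands instead of executing them.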

By now, we have a partial flow, which works entirely on GitHub’s platform:

- Schema change as code

- Review process, based on GitHub’s pull request flow

- Automated schema change analysis, based on skeema running in a GitHub Action

- A visible output, presented as a pull request comment

Up to this point, everything is constrained to the repository. The repository itself doesn’t have information about where the schema gets deployed in production. This information is something that’s outside the repository’s scope, and it’s owned by the database infrastructure team rather than the repository’s developers. Neither the repository nor any action running on that repository has access to production, nor should they, as that would be a breach of domains.

Before we describe how the schema gets to production, let’s jump ahead and discuss the schema migration itself.

Schema migrations and gh-ost

Even the simplest schema migration isn’t simple. We are concerned with three types of table migrations:

- CREATE TABLE is the simplest and the safest. We created something that didn’t exist before, and its creation time is instantaneous. Note that if the target cluster is sharded, this must be applied on all shards. If the cluster is sharded with vitess, then the vitess vtgate service automatically handles this for us.

- DROP TABLE is a simple statement that comes with a great risk. What if it’s still in use and some code breaks as a result of the table going away? Note that we don’t actually drop tables as part of schema migrations. Any DROP TABLE statement is converted into a RENAME TABLE. Instead of DROP TABLE repositories (whoops!), our automation runs RENAME TABLE repositories TO _repositories_DROP_20200101123456. If our application fails because of this, we have an instant revert command: RENAME back to the original. Renamed tables are kept around for a few days prior to being garbage collected and dropped by our automation.

- ALTER TABLE is the most complex case, mainly because it takes time to alter a table. We don’t actually ALTER tables in-place. We use gh-ost to emulate an ALTER TABLE, and the end result is the same even though the process is completely different. It doesn’t lock our apps, throttles as much as needed, and it’s controllable as well as auditable. We’ve run gh-ost in production for over three and a half years. It has little to no impact on production, and we generally don’t care that it’s running. But some of our larger tables may still take hours or even days to migrate. We also only run one ALTER (or, gh-ost) at a time on a cluster. Concurrent migrations are possible but compete over resources, leading to overall longer runtimes than sequential execution. This means that an ALTER migration requires scheduling. We need to be able to tell if a migration is already running on a cluster, as well as prioritize and queue migrations that apply to the same cluster. We also need to be able to tell the status over the duration of hours or days, and this needs to be communicated to the developer, the owner of the change. And, if the cluster is sharded, we need to run the migration per shard.
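The DROP-to-RENAME conversion described above could look roughly like this. It is a sketch, not our automation’s code: the function names are hypothetical, the parsing is a deliberately simple regular expression that only handles a single unqualified table name, and the timestamp format is inferred from the example name in the text.

```python
import re
from datetime import datetime, timezone
from typing import Optional

# Matches e.g. "DROP TABLE repositories" or "drop table if exists `repositories`;"
_DROP_RE = re.compile(
    r"^\s*DROP\s+TABLE\s+(?:IF\s+EXISTS\s+)?`?(?P<table>\w+)`?\s*;?\s*$",
    re.IGNORECASE,
)


def drop_to_rename(statement: str, now: Optional[datetime] = None) -> str:
    """Rewrite a DROP TABLE statement as a RENAME TABLE; pass anything else through."""
    match = _DROP_RE.match(statement)
    if match is None:
        return statement
    now = now or datetime.now(timezone.utc)
    table = match.group("table")
    suffix = now.strftime("%Y%m%d%H%M%S")
    return f"RENAME TABLE {table} TO _{table}_DROP_{suffix}"


def revert_rename(table: str, renamed: str) -> str:
    """Build the instant revert: rename the table back to its original name."""
    return f"RENAME TABLE {renamed} TO {table}"


if __name__ == "__main__":
    stamp = datetime(2020, 1, 1, 12, 34, 56)
    print(drop_to_rename("DROP TABLE repositories", now=stamp))
```

Because the original name is embedded in the new one, both the revert statement and the later garbage collection can be derived from the renamed table alone.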

In order to run a migration, we must first determine the strategy for that migration (Is it a direct query, gh-ost, or manual?). We need to be able to tell where it can run, how to go about the process if the cluster is sharded, as well as when to schedule it. While migrations can wait in queue while others are running, we want to be able to prioritize migrations, in case the queue is large.
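As an illustration of the strategy decision, here is a minimal sketch that classifies a statement by its leading keywords. The Strategy enum and choose_strategy function are hypothetical names, and falling back to manual handling for anything unrecognized is an assumption rather than a description of our tooling.

```python
from enum import Enum


class Strategy(Enum):
    DIRECT = "direct"   # run the statement as a plain query
    GH_OST = "gh-ost"   # run through the online schema change tool
    MANUAL = "manual"   # needs a human to decide


def choose_strategy(statement: str) -> Strategy:
    """Pick a migration strategy from the statement's first two keywords."""
    words = statement.split()
    if len(words) < 2 or words[1].upper() != "TABLE":
        return Strategy.MANUAL
    verb = words[0].upper()
    if verb in ("CREATE", "DROP", "RENAME"):
        return Strategy.DIRECT
    if verb == "ALTER":
        return Strategy.GH_OST
    return Strategy.MANUAL
```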

skeefree

We created skeefree as the glue, which means it’s an orchestrating service that’s aware of our repositories, can communicate with our pull requests, knows about production (or, can get information about production) and which invokes the migrations. We run skeefree as a stateless Kubernetes service, backed by a MySQL database that holds the state. Note that skeefree’s own schema is managed by skeefree.

skeefree uses GitHub’s API to interact with pull requests, GitHub’s internal inventory and discovery services to locate clusters in production, and gh-ost to run migrations. skeefree is best described by following a schema migration flow:

- A developer wishes to change the schema, so they open a pull request.

- The skeema-diff Action springs to life and looks for a schema change. If a schema change isn’t found in the pull request, nothing happens. If there is a schema change, the Action computes the change via skeema, adds a well-formed comment to the pull request indicating the change, and adds a migration:skeema:diff label to the pull request. This is done via the GitHub API.

- A developer looks into the change, and seeks review from a team member. At this time they may communicate to team members without actually going to production. Finally, they add the label migration:for:review.

- skeefree is aware of the developer’s repository and uses the GitHub API to periodically look for open pull requests, which are labeled by both migration:skeema:diff and migration:for:review, and have been approved by at least one developer.

- Once detected, skeefree investigates the pull request, and reads the schema change comment, generated by the Action. It maps the schema/repository to the schema/production cluster, and uses our inventory and discovery services to know if the cluster is sharded. Then, it finds the location and name of the cluster.

- skeefree then adds this to its backend database, and advertises its analysis on the pull request with another comment. This comment generally means “here’s what I will do if you approve”. And it proceeds to get a review from an authority.

- For most repositories, the authority is the database-infrastructure team. On our original RoR repository, we also seek review from a cross-functional team, known as the db-schema-reviewers, who are familiar with the general application and database design throughout the years and who have more context to offer. skeefree automatically knows which teams should be notified on which repositories.

- The relevant teams review and hopefully approve. skeefree detects the approval, then chooses the proper strategy (direct query for CREATE and DROP, or RENAME, and gh-ost for ALTER). It then queues the migration(s).

- skeefree’s scheduler periodically checks what can be executed next. Remember we only run a single ALTER migration on a given cluster at a time, but we also have a limited number of runner hosts. If there’s a free runner host and the cluster is not running any migration, skeefree then proceeds to kick off a migration. skeefree advertises this fact as a pull request comment to notify the developer that the migration started.

- Once the migration is complete, skeefree announces it in a pull request comment. The same applies should the migration fail.

- The pull request may also have more than one migration. Perhaps the cluster is sharded, or there may be multiple tables changed in the pull request. Once all migrations are successfully completed, skeefree advertises this in a pull request comment. The developer is notified that all migrations are done, and they’re encouraged to proceed with their standard deploy/merge flow.
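The scheduling rule in the flow above (a free runner host is needed, and a cluster runs at most one migration at a time) can be sketched like this. It is an illustration only, not skeefree’s code: QueuedMigration, its priority field, and pick_runnable are hypothetical, and ordering by priority first and queue position second is an assumption.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass(frozen=True)
class QueuedMigration:
    pull_request: int
    cluster: str
    statement: str
    priority: int = 0  # hypothetical: higher values are scheduled first


def pick_runnable(
    queue: Iterable[QueuedMigration],
    busy_clusters: Set[str],
    free_runners: int,
) -> List[QueuedMigration]:
    """Choose which queued migrations may start right now."""
    picked: List[QueuedMigration] = []
    busy = set(busy_clusters)  # copy, so the caller's set is not modified
    # sorted() is stable: equal priorities keep their queue (FIFO) order.
    for migration in sorted(queue, key=lambda m: -m.priority):
        if len(picked) >= free_runners:
            break  # no runner host left
        if migration.cluster in busy:
            continue  # this cluster already has a migration running
        picked.append(migration)
        busy.add(migration.cluster)
    return picked
```

Calling this periodically, with the currently busy clusters and the number of idle runner hosts, yields the migrations to kick off in that round. Everything else stays queued.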

Analysis of the flow

There are a few nuances here that make for a good experience for everyone involved:

- The database infrastructure team doesn’t know about the pull request until the developer explicitly adds the migration:for:review label. It’s like a draft pull request or a pull request that’s a work in progress, only this flag applies specifically to the schema migration flow. This allows the developer to use their preferred flow, and communicate with their team without interrupting the database infrastructure team or getting premature reviews.

- The skeema analysis is contained within the repository, which means that no external service is required. The developer can check the diff result themselves.

- The Action is the only part of the flow that looks at the code. Neither skeefree nor gh-ost look at the actual code, and they don’t need git access.

- The database infrastructure team only needs to take a single step, which is to review the pull request.

- The developers own the creation of pull requests, getting peer reviews, and finally, deploying and merging. These are the exact operations that should be under their ownership. Moreover, they get visibility into the state of their migration. By looking at the pull request page or their GitHub notifications, they can tell whether the pull request has been reviewed, queued, started, completed, or failed. They don’t need to ask. Even better, we have chatops that give visibility into the overall state of migration queue, a running migration’s progress, and more. These chatops are available for all to invoke.

- The database infrastructure team owns the process of mapping the repository schema to production. This is done via chatops, but can also be completed via configuration. The team is able to cancel a pull request, retry a failed migration, and more.

- gh-ost is generally trusted, and we have control over a running migration. This means that we can force it to throttle, set up a different throttle threshold, make it use fewer resources, or terminate it, if needed. We also have a throttling mechanism throughout our stack, so that long-running processes like migrations yield to higher-priority operations. This extends their runtime, but keeps them from generating too much load on our database servers.

- We use our own preferred pull request flow, Actions (skeefree was an early adopter of Actions), GitHub API, and our existing datacenter and database infrastructure, all of which are well understood internally.

Public availability

skeefree and the skeema-diff Action were authored internally at GitHub to solve a specific problem. skeefree uses our internal inventory and discovery services, it works with our chatops and uses some internal libraries.

Our experience in releasing open source software is that no one’s use case is exactly the same as ours. Our perception of an automated migrations flow may be very different from another organization’s perception. We still want to share more than just our words, so we’ve open sourced the code.

It’s a bit of a peculiar OSS release:

- It’s missing some libraries; it will not build.

- It expects some of our internal services to exist, which more than likely won’t be on your platform.

- It expects chatops, and you may not be using chatops.

- The code also needs to be rewritten to adapt it to your environment.

Note that the code is available, but not open for issues and pull requests. We hope the community finds it useful.

Tags:

Written by

Related posts

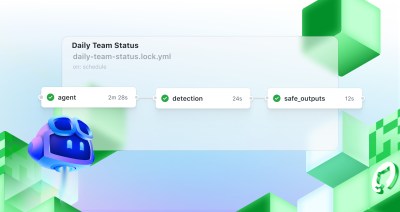

Automate repository tasks with GitHub Agentic Workflows

Discover GitHub Agentic Workflows, now in technical preview. Build automations using coding agents in GitHub Actions to handle triage, documentation, code quality, and more.

Level up design-to-code collaboration with GitHub’s open source Annotation Toolkit

Prevent accessibility issues before they reach production. The Annotation Toolkit brings clarity, compliance, and collaboration directly into your Figma workflow.

How to use the GitHub and JFrog integration for secure, traceable builds from commit to production

Connect commits to artifacts without switching tools.