Believe it or not, just over a year ago, GitHub Pages, the documentation hosting service that powers nearly three-quarters of a million sites, was little more than a 100-line shell script. Today, it’s a fully independent, feature-rich OAuth application that effortlessly handles well over a quarter million requests per minute. We wanted to take a look back at what we learned from leveling up the service over a six-month period.

GitHub Pages is GitHub’s static-site hosting service. It’s used by government agencies like the White House to publish policy, by big companies like Microsoft, IBM, and Netflix to showcase their open source efforts, and by popular projects like Bootstrap, D3, and Leaflet to host their software documentation. Whenever you push to a specially named branch of your repository, the content is run through the Jekyll static site generator, and served via its own domain.

At GitHub, we’re big fans of eating our own ice cream (some call it dogfooding). Many of us have our own personal sites hosted on GitHub Pages, and many GitHub-maintained projects like Hubot and Electron, along with sites like help.github.com, take advantage of the service as well. This means that when the product slips below our own heightened expectations, we’re the first to notice.

We like to say that there’s a Venn diagram of things that each of us is passionate about, and things that are important to GitHub. Whenever there’s significant overlap, it’s win-win, and GitHubbers are encouraged to find time to pursue their passions. The recent improvements to GitHub Pages, a six-month sprint by a handful of Hubbers, were one such project. Here’s a quick look back at eight lessons we learned:

Before touching a single line of code, the first thing we did was create integration tests to mimic and validate the functionality experienced by users. This included things you might expect, like making sure a user’s site built without throwing an error, but also specific features like supporting different flavors of Markdown rendering or syntax highlighting.

This meant that as we made radical changes to the code base, like replacing the shell script with a fully-fledged Ruby app, we could move quickly with confidence that everyday users wouldn’t notice the change. And as we added new features, we continued to do the same thing, relying heavily on unit and integration tests, backed by real-world examples (fixtures) to validate each iteration. Like the rest of GitHub, nothing got deployed unless all tests were green.

One of our goals was to push the Pages infrastructure outside the GitHub firewall, such that it could function like any third-party service. Today, if you view your OAuth application settings you’ll notice an entry for GitHub Pages. Internally, we use the same public-facing Git clone endpoints to grab your site’s content that you use to push it, and the same public-facing repository API endpoints to grab repository metadata that you might use to build locally.

For us, that meant adding a few public APIs, like the inbound Pages API and outbound PageBuildEvent webhook. There are a few reasons why we chose to use exclusively public APIs and to deny ourselves access to “the secret sauce”. For one, security and simplicity. Hitting public-facing endpoints with untrusted user content meant all page build requests were routed through existing permission mechanisms. When you trigger a page build, we build the site as you, not as GitHub. Second, if we want to encourage a strong ecosystem of tools and services, we need to ensure the integration points are sufficient to do just that, and there’s no better way to do that than to put your code where your mouth is.
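To make the outbound webhook a little more concrete, here’s a minimal sketch of how a third-party tool might summarize a page build payload. The field names (`build.status`, `build.error.message`, `repository.full_name`) and the `built` and `errored` status values reflect our reading of the public webhook documentation and should be treated as assumptions; `summarize_page_build` is a hypothetical helper, not part of any GitHub library.

```python
from typing import Any


def summarize_page_build(payload: dict[str, Any]) -> str:
    """Turn a page build webhook payload into a one-line status message.

    Field names are assumptions based on the public webhook docs.
    """
    build = payload.get("build") or {}
    repo = (payload.get("repository") or {}).get("full_name", "unknown repository")
    status = build.get("status") or "unknown"
    # The error message is only reported when the build actually errored.
    message = (build.get("error") or {}).get("message")
    if status == "errored" and message:
        return f"{repo}: build errored: {message}"
    return f"{repo}: build {status}"
```

Because the helper only reads a plain dictionary, it works the same whether the payload arrives over HTTP or is loaded from a saved fixture.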

Developing a service is vastly different from developing an open source project. When you’re developing a software project, you have the luxury of semantic versioning and can implement radical, breaking changes without regret, as users can upgrade to the next major version at their convenience (and thus ensure their own implementation doesn’t break before doing so). With services, that’s not the case. If we implement a change that’s not backwards compatible, hundreds of thousands of sites will fail to build on their next push.

We made several breaking changes. For one, the Jekyll 2.x upgrade switched the default Markdown engine, meaning if users didn’t specify a preference, we chose one for them, and that choice had to change. In order to minimize this burden, we decided it was best for the user, not GitHub, to make the breaking change. After all, there’s nothing more frustrating than somebody else “messing with your stuff”.

For months leading up to the Jekyll 2.x upgrade, users who didn’t specify a Markdown processor would get an email on each push, letting them know that Maruku was going the way of the dodo, and that they should upgrade to Kramdown, the new default, at their convenience. There were some pain points, to be sure, but it’s preferable to set an hour aside to perform the switch and verify the output locally than to push a minor change, find that your entire site won’t publish, and lose hours to frustration as you try to diagnose the issue.
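The check behind that email can be sketched in a few lines. This is an illustration under stated assumptions, not the code we ran: it takes an already-parsed `_config.yml` as a dictionary, looks at Jekyll’s `markdown` setting, and returns a warning when no engine is chosen. Flagging an explicit `maruku` setting as well is our own extension for the example, and `markdown_warning` is a hypothetical name.

```python
from typing import Optional

DEPRECATED_ENGINES = {"maruku"}  # engine being retired, per the post
NEW_DEFAULT = "kramdown"         # the new default engine, per the post


def markdown_warning(config: dict) -> Optional[str]:
    """Return a warning if the site relies on the old Markdown default."""
    engine = config.get("markdown")
    if engine is None:
        # No explicit choice: the site silently depends on the default.
        return (
            "No Markdown engine specified; add "
            f"'markdown: {NEW_DEFAULT}' to _config.yml before the upgrade."
        )
    if str(engine).lower() in DEPRECATED_ENGINES:
        # Assumption for this sketch: explicit use of the old engine is flagged too.
        return f"'{engine}' is deprecated; switch to '{NEW_DEFAULT}'."
    return None
```

Returning `None` for a healthy configuration keeps the common case silent, so users only hear from the check when there is something to do.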

We made a big push to improve the way we communicated with GitHub Pages users. First, we began pushing descriptive error messages when users’ builds failed, rather than an unhelpful “page build failed” error, which would require the user to either build the site locally or email GitHub support for additional context. Each error message let you know exactly what happened, and exactly what you needed to do to fix it. Most importantly, each error included a link to a help article specific to the error you received.

Errors were a big step, but still weren’t a great experience. We wanted to prevent errors before they occurred. We created the GitHub Pages Health Check and silently ran automated checks for common DNS misconfigurations on each build. If your site’s DNS wasn’t optimally configured, such as being pointed to a deprecated IP address, we’d let you know before it became a problem.
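As a rough sketch of one such check, the function below compares the addresses a custom domain resolves to against a list of deprecated IPs. It is not the Health Check’s actual code: `dns_warnings` is a hypothetical helper, the DNS lookup is assumed to have happened already, and the address in `DEPRECATED_IPS` is a placeholder from the reserved documentation range rather than a real GitHub Pages IP.

```python
import ipaddress

# Placeholder address from the 192.0.2.0/24 documentation range,
# NOT a real (or formerly real) GitHub Pages IP.
DEPRECATED_IPS = {ipaddress.ip_address("192.0.2.10")}


def dns_warnings(domain: str, resolved: list[str]) -> list[str]:
    """Return one warning per resolved address that is deprecated."""
    warnings = []
    for raw in resolved:
        # Parsing normalizes the address, so comparisons are by value.
        if ipaddress.ip_address(raw) in DEPRECATED_IPS:
            warnings.append(f"{domain} points to deprecated IP address {raw}")
    return warnings
```

Keeping the lookup separate from the comparison means the check can be exercised against recorded DNS answers without touching the network.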

Finally, we wanted to level up our documentation to prevent the misconfiguration in the first place. In addition to overhauling all our GitHub Pages help documentation, we reimagined pages.github.com as a tutorial quick-start, lowering the barrier for getting started with GitHub Pages from hours to minutes, and published a list of dependencies along with the version of each being used in production.

This meant that every time you got a communication from us, be it an error, a warning, or just a question, you’d immediately know what to do next.

While GitHub Pages is used for all sorts of crazy things, the service is all about creating beautiful user, organization, and project pages to showcase your open source efforts on GitHub. Lots of users were doing just that, but ironically, it used to be really difficult to do so. For example, to list your open source projects on an organization site, you’d have to make dozens of client-side API calls, and hope your visitor didn’t hit the API limit, or leave the site while they waited for it to load.

We exposed repository and organization metadata to the page build process, not because it was the most commonly used feature, but because it was at the core of the product’s use case. We wanted to make it easier to do the right thing — to create great software, and to tell the world about it. And we’ve seen a steady increase in open source marketing and showcase sites as a result.

If we did our job right, you didn’t notice a thing, but the GitHub Pages backend has been completely replaced. Whereas before, each build would occur in the same environment as part of a worker queue, today, each build occurs in its own Docker-backed sandbox. This ensured greater consistency (and security) between builds.

Getting there required a cross-team effort between the GitHub Pages, Importer, and Security teams to create Hoosegow, a Ruby Gem for executing untrusted Ruby code in a disposable Docker sandbox. No one team could have created it alone, nor would the solution have been as robust without the vastly different use cases, but both products and the end user experience are better as a result.
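To give a feel for what a disposable sandbox looks like, here’s a sketch that assembles a `docker run` command for a one-off Jekyll build. This is not how Hoosegow works internally; it is a simplified stand-in using the Docker CLI. The `sandbox_command` helper, the `/src` and `/out` mount points, and the `example/jekyll-builder` image name are all hypothetical.

```python
import os


def sandbox_command(site_dir: str, output_dir: str,
                    image: str = "example/jekyll-builder") -> list[str]:
    """Build the argv for a throwaway container that runs one Jekyll build."""
    src = os.path.abspath(site_dir)    # Docker bind mounts need absolute paths
    out = os.path.abspath(output_dir)
    return [
        "docker", "run",
        "--rm",                      # discard the container once the build exits
        "--network", "none",         # untrusted content gets no network access
        "--volume", f"{src}:/src",   # the user's site source
        "--volume", f"{out}:/out",   # where the generated site is written
        image,
        "jekyll", "build", "--source", "/src", "--destination", "/out",
    ]
```

The function only returns the argument list, so it can be inspected or logged before anything is handed to `subprocess.run`.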

Expectations are a powerful force. Everywhere on GitHub you can expect @mentions and emoji to “just work”. For historical reasons, that wasn’t the case with GitHub Pages, and we got many confused support requests as a result. Rather than embark on an education campaign or otherwise go against user expectations, we implemented emoji and @mention support within Jekyll, ensuring an expectation-consistent experience regardless of what part of GitHub you were on.

The only thing better than meeting expectations is exceeding them. Traditionally, users expected about a ten to fifteen minute lag between the time a change was pushed and when that change would be published. Through our improvements, we were able to significantly speed up page builds internally, and by sending a purge request to our third-party CDN on each build, users could see changes reflected in under ten seconds in most cases.

Jekyll may have been originally created to power GitHub Pages, but since then, it has become its own independent open source project with its own priorities. GitHubbers have always been part of the Jekyll community, but if you look at the most recent activity, you’ll notice a sharp uptick in contributions, and many new contributors from GitHub.

If you use open source, whether it’s the core of your product or a component that you didn’t have to write yourself, it’s in your best interest to play an active role in supporting the open source community, ensuring the project has the resources it needs, and shaping its future. We’ve started “open source Fridays” here at GitHub, where the entire company takes a break from the day-to-day to give back to the open source community that makes GitHub possible. Today, despite their beginnings, GitHub Pages needs Jekyll, not the other way around.

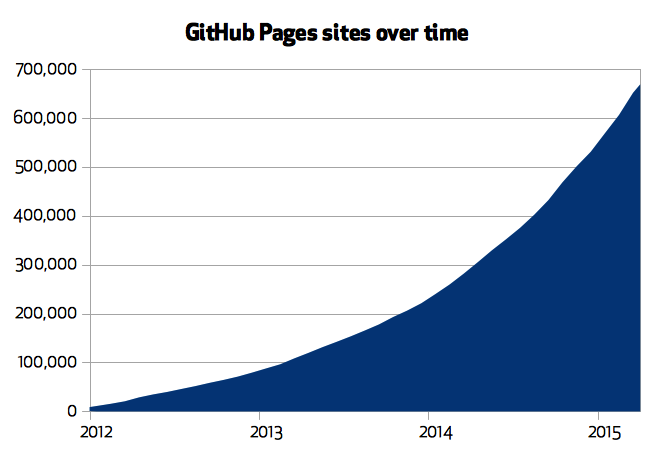

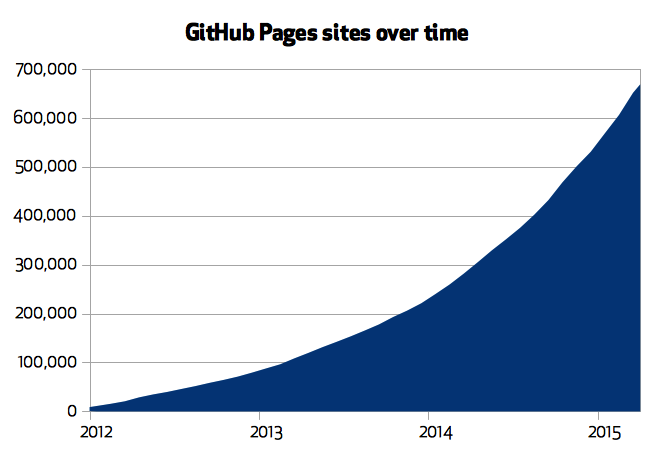

Throughout all these improvements, the number of GitHub Pages sites has grown exponentially, with just shy of three-quarters of a million user, organization, and project sites being hosted by GitHub Pages today.

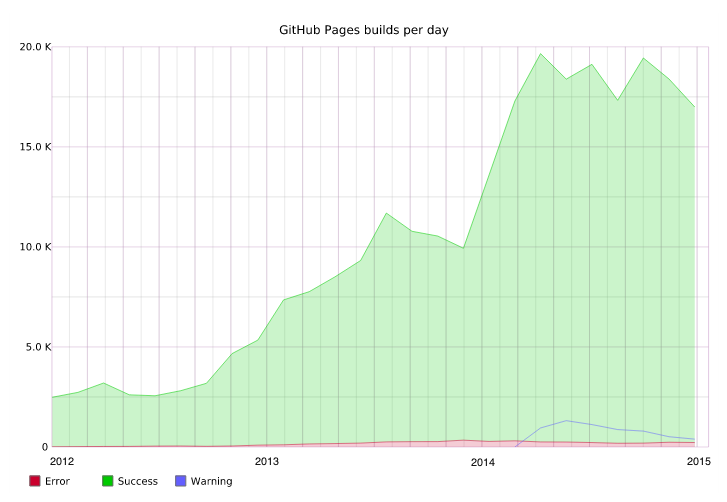

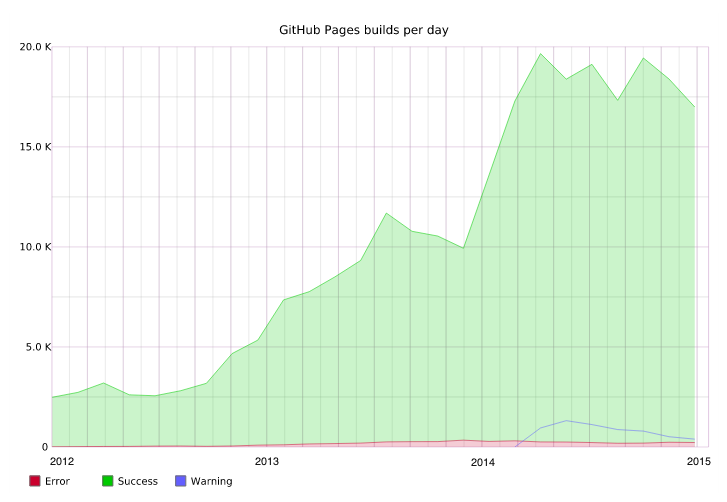

But the number of sites tells only half the story. Day-to-day use of GitHub Pages has also seen similar exponential growth over the past three years, with about 20,000 successful site builds completing each day as users continuously push updates to their site’s content.

Last, you’ll notice that when we introduced page build warnings in mid-2014, to proactively warn users about potential misconfigurations, users took the opportunity to improve their sites, with the percentage of failed builds (and number of builds generating warnings) decreasing as we enter 2015.

GitHub Pages is a small but powerful service tied to every repository on GitHub, and it’s deceptively simple. I encourage you to create your first GitHub Pages site today, or if you’re already a GitHub Pages expert, tune in this Saturday to level up your GitHub Pages game.

Happy publishing!

Written by

Ben Balter is Chief of Staff for Security at GitHub, the world’s largest software development platform. Previously, as a Staff Technical Program Manager for Enterprise and Compliance, Ben managed GitHub’s on-premises and SaaS enterprise offerings, and as the Senior Product Manager overseeing the platform’s Trust and Safety efforts, Ben shipped more than 500 features in support of community management, privacy, compliance, content moderation, product security, platform health, and open source workflows to ensure the GitHub community and platform remained safe, secure, and welcoming for all software developers. Before joining GitHub’s Product team, Ben served as GitHub’s Government Evangelist, leading the efforts to encourage more than 2,000 government organizations across 75 countries to adopt open source philosophies for code, data, and policy development.